0 Introduction

With the continuous growth in the demand for electric energy transmission and the scale of electric power systems in China, the safety and stability of these systems are becoming increasingly crucial and have resulted in stringent requirements for their operational reliability. Inspection is a critical link in ensuring the safety and stability of electric power equipment. However, such equipment is widely distributed across facilities with complex arrangements. Furthermore, the natural environment surrounding substations or overhead lines, where some equipment is located, may be harsh and complex. Consequently, traditional manual inspection methods have low efficiency and high investment costs, and inspection in complex geographical environments may also pose safety risks to inspectors.

In recent years, unmanned aerial vehicles (UAVs) have become important tools for transmission line inspection owing to their small size, light weight, low price, ease of control, and flexibility. Since 2013, the State Grid Corporation has gradually replaced manual transmission line inspections with UAV inspections and increased the scale of UAV use. As of May 2022, 18,000 UAVs are employed in inspection teams for transmission lines rated at and above 66 kV, and 2,900 UAVs are used for inspections of transmission lines rated at or below 35 kV. Although UAV inspections have reduced the workload and intensity of inspections, the inefficiencies and risk of visual fatigue that remain in the manual inspection of collected images increase the probability of missed inspections.

Deep learning has achieved remarkable accomplishments in computer vision [1]. The robust feature extraction capability of deep learning makes it suitable for handling UAV inspection images with intricate backgrounds, variable scenes, and diverse target features. Liu et al. [2] used a multilayer perceptron for transmission line, insulator, and anti-vibration hammer detection and constructed a semantic knowledge model for target recognition using the location association and local contour features of the detected objects. The model achieved an accuracy of 90.06%. Pan et al. [3] proposed a transmission line extraction method based on a convolutional neural network (CNN) and the Hough transform, which achieved an accuracy of 91.14%. To extract the transmission lines, an edge feature detector is first employed to generate a candidate target set and the CNN is subsequently used to suppress background noise within the candidate target set. Line segment detection based on the Hough transform is then performed to complete the extraction process. Ling et al. [4] used a Faster region-based CNN (Faster R-CNN) to detect insulators. The insulator region is then cropped out and a U-Net network is applied to detect insulator self-detonation defects. A recall of 95.5% and an accuracy of 95.1% were achieved.

Despite the progress made in utilizing deep learning for processing electric power equipment inspection images, significant gaps still exist in its practical use. This is because, in most cases, the current models were developed under laboratory conditions for specific electric power equipment inspection tasks in particular scenarios. These models were developed to analyze sample images collected by overhead line drones during inspections of specific regions. The accuracy decreased significantly when the models were applied to other scenarios. In addition, the development of deep learning-based UAV inspection image processing models for specific scenarios is expensive and time-consuming. This approach therefore cannot satisfy the growing demand for image analysis and processing in UAV inspections of electric power equipment.

Automated machine learning (AutoML) is a technique for automating the construction and training of machine learning models. Examples include hyperparameter optimization [5] and meta-learning [6]. Automated machine learning techniques can be used to find and train the optimal machine learning model automatically, thereby reducing the cost of model development significantly [7]. Previous studies have investigated the application of automated machine learning modeling techniques to electric power tasks such as cable condition diagnosis [8], ultra-short-term load forecasting [9], and solar power prediction [10]. However, conventional automated machine learning methods are limited to laboratory scenarios with minimal data distribution changes. They cannot adapt to computer vision scenarios with complex and constantly evolving UAV inspection image processing models. To overcome this limitation, an automated deep learning system for analyzing and processing electric power UAV inspection images is proposed in this study. The study addresses the failure of traditional automated machine learning techniques to adapt to complex data distributions and handle difficult computer vision tasks, such as target detection and semantic segmentation in the processing of electric power UAV inspection images. The key contributions of this study are as follows:

(1) To address the weak adaptability of automated machine learning in electric power UAV inspection scenarios, we propose the three innovative design principles of generalizability, extensibility, and automation for such automated deep learning systems.

(2) We review the research on improving the generalizability, extensibility, and automation of models utilized for processing UAV inspection images. The advantages and disadvantages of each technique are also analyzed. Furthermore, a technical route for building an automated deep learning system is proposed with emphasis on improving the aforementioned areas.

(3) We present the system architecture of an automated deep learning system designed to analyze and process electric power UAV inspection images. We also built a prototype system and conducted experiments for the two UAV inspection image processing tasks of bird nest identification and insulator self-detonation detection. The modeling performance of the prototype system was evaluated using the mean average precision (mAP) values. mAP values exceeding 85% were achieved in both sets of experiments.

The remainder of this paper is organized as follows. In Section 1, the applications of electric power UAV intelligent inspection are introduced and the need for an automated deep learning system for analyzing and processing UAV inspection images is highlighted. In Section 2, the three design principles of generalizability, extensibility, and automation for an automated deep learning system are introduced. In Section 3, advances in techniques for pre-trained models to fulfill the generalizability design principle are reviewed. In Section 4, the progress in fine-tuning (downstream task adaptation) to fulfill the extensibility design principle is reviewed. In Section 5, the progress in automated machine learning to fulfill the automation design principle is reviewed. In Section 6, the system architecture of an automated deep learning system for electric power UAV inspection image analysis and processing is described. In Section 7, the experimental results from the prototype automated deep learning system for electric power UAV inspection image analysis and processing are described. In Section 8, the applicable scenarios for the system are briefly discussed. Finally, the paper is concluded in Section 9 and the outlook for future research is provided.

1 Application of UAVs in transmission line inspection

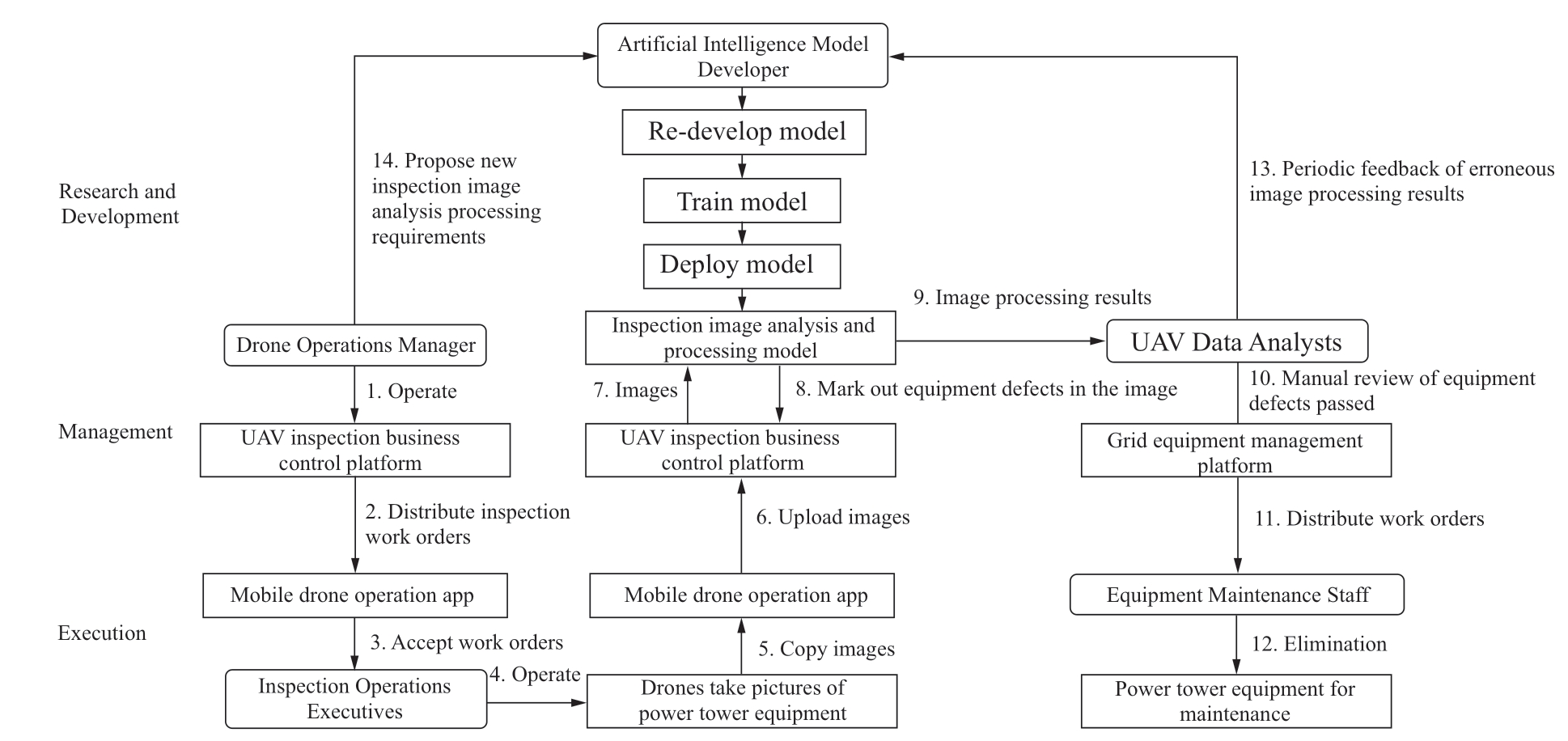

Figure 1 shows the workflow for electric power transmission line inspection using UAVs. The workflow begins when the manager operating the UAV control platform sends inspection orders to the mobile UAV operation app. The UAV pilots accept the work order, operate the UAVs, capture images of the electric power equipment, and upload the images to the UAV control platform using the mobile UAV operation app.

An inspection image analysis and processing model is then used to analyze equipment defects in the images and provide the results to UAV image data analysts for verification. After verification, work orders are sent to the electric equipment maintenance workers to perform repairs. The UAV managers and data analysts provide regular feedback on new inspection image analysis and processing requirements and on the model identification errors to the model developers, who then redesign, retrain, and deploy new models.

Because AI models, unlike traditional software, cannot be developed in modular blocks, the model developer must tailor the models to meet the specific requirements of the UAV managers and data analysts. This process can take between one and six months, which results in long-standing defects and a high false alarm rate in the UAV image analysis and processing model. The high false alarm rate increases the workload of the electric equipment maintenance workers.

2 System design considerations

To address the issues of poor adaptability and slow iterative updates in the electric power UAV inspection processing model, an automated deep learning system is required to automate the processes of optimizing and updating the deep learning model. Using a large number of UAV inspection image samples, a high-performance UAV inspection image processing model can be custom-developed to meet the requirements for electric power UAV inspection. This improves the utility of the model and reduces the workload of inspection workers.

Fig.1 Workflow of transmission line inspection using UAVs

Although deep learning models can be constructed using traditional automated machine learning techniques such as neural architecture search and hyperparameter optimization, these techniques work well only for specific tasks on publicly available datasets such as CIFAR10 and often perform poorly in scenario applications [11]. Hence, they cannot be used directly to build automatic deep learning systems for UAV image processing. The poor performance of these techniques can be attributed to two reasons. First, compared to fixed datasets in open dataset tasks, the samples in the automatic deep learning system for UAV inspection image processing increase and are updated continuously owing to the introduction of new equipment in the inspection scene, the weather, environment, and other factors. This phenomenon is known as data drift. The learning ability of visual models built using AutoML is limited by the small numbers of parameters in the model, which leads to a continuous degradation of the model performance in actual scenes. Second, classical AutoML methods focus on only a single task, such as image classification or target recognition, and do not impose requirements on the computational resources required for model operation. However, UAV inspection image processing includes target detection and multiple other computer vision tasks, which constrains the ability of classical AutoML to automate these tasks.

These considerations motivate the three design principles of generalizability, extensibility, and automation in the automated deep learning system for electric power UAV inspection image analysis and processing. Generalizability means that the deep learning system should adapt to constantly updated and changing image data samples from the UAV inspection scene. Extensibility means that the system should be oriented toward multiple computer vision tasks in the inspection image processing scenario, and that the constructed model should be deployable on both the cloud and edge. Automation means that the system should not require human intervention to construct a UAV inspection image processing model. By fulfilling these design principles, the automated deep learning system can address the poor adaptability and slow iterative updates of current electric power UAV inspection processing models.

The most widely used and mature paradigm for building automated deep learning systems is the pre-training + fine-tuning paradigm [12]. A typical pre-training + fine-tuning workflow is shown in Fig.2. A model is first pre-trained on a large amount of public data to obtain a model backbone network with a large parameter size. Using more data in the pre-training phase increases the a priori knowledge of the model, the number of backbone network parameters, and the generalizability of the model.

Fig.2 Pre-training and fine-tuning

Fig.3 Construction of large-scale pre-training model for electric power vision

In the fine-tuning stage (also called downstream task adaptation), the model backbone network is combined with multiple model heads to construct a customized model suitable for a specific computer vision task. Despite the large number of pre-trained model parameters, it is possible to construct a smaller model suitable for the edge through knowledge distillation and pruning. Moreover, because the complexity of model structure design and retraining in the fine-tuning stage is relatively low, AutoML can be employed to replace manual labor and improve system automation.

With respect to the three design principles of generalizability, extensibility, and automation, the current statuses of pre-training, fine-tuning (downstream task adaptation), and automated machine learning techniques in the field of computer vision are reviewed in Sections 3, 4, and 5, respectively. These techniques are crucial for ensuring the success of the pre-training + fine-tuning paradigm and enhancing the generalizability, extensibility, and automation in the system.

3 Pre-trained model techniques

The primary aim of pre-trained model techniques is to train a general model using a large amount of data and simple tasks so that the model can be fine-tuned efficiently for different downstream tasks. In the context of an automated deep learning system for electric power UAV inspection image analysis and processing, the use of pre-trained models has the following advantages:

(1) Pre-trained model techniques require only a large volume of data samples, with relatively low requirements on sample and labeling quality. This enables the system to maximize the use of the massive image data generated during UAV inspection, such as data pertaining to the poles, cables, insulators, fittings, and lightning arresters as well as environmental data, while also reducing the cost and time of manually selecting and labeling the images during the model training phase.

(2) The large number of parameters in pre-trained models contributes to their better generalizability [13], thereby allowing them to perform better in complex environments such as electric power UAV inspection tasks and adapt more efficiently to out-of-distribution image data.

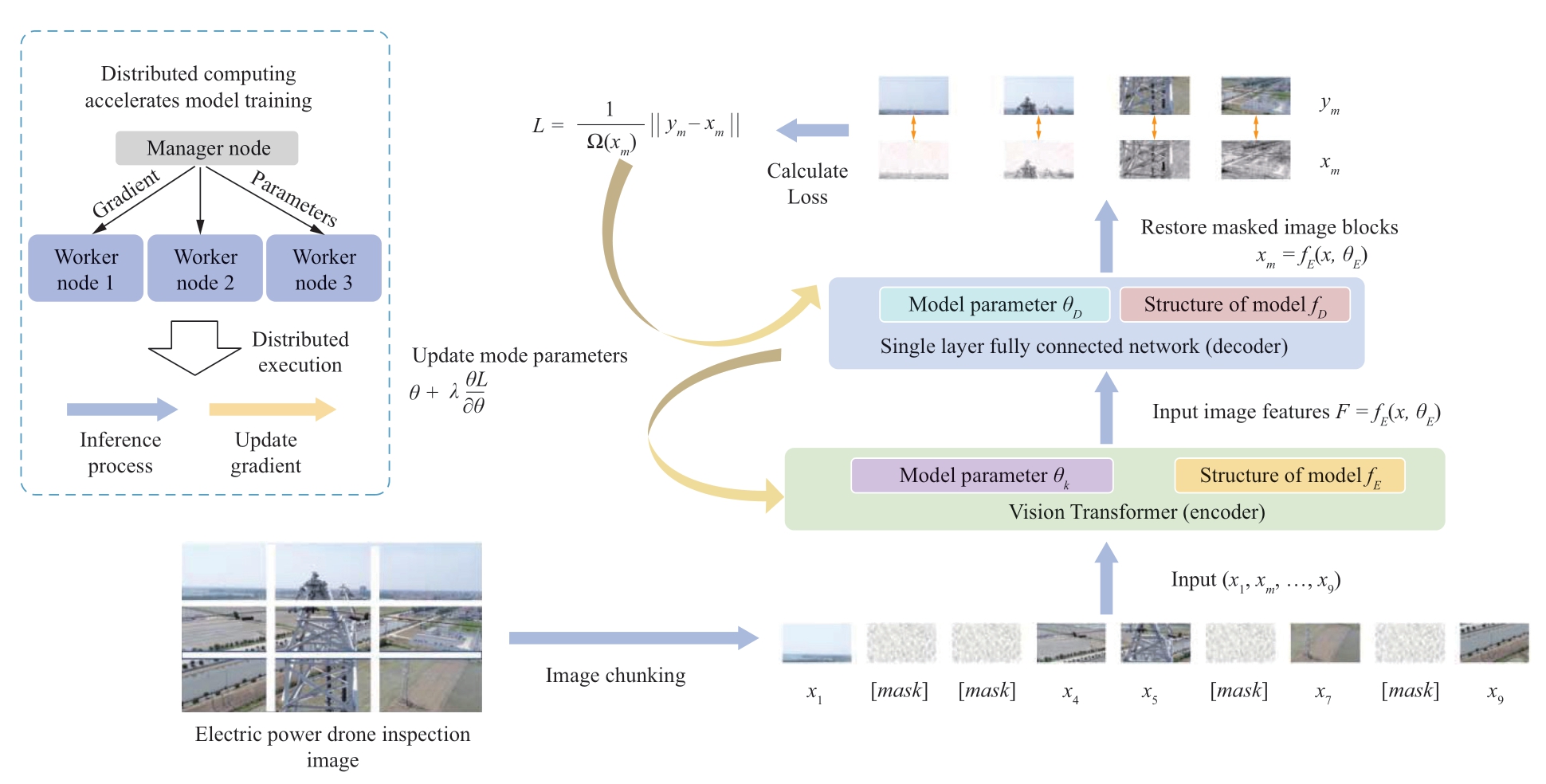

A flow diagram for constructing a vision-based model using pre-training techniques is shown in Fig.3. The process begins with the division of the electric power image samples into several blocks. A masking operation is randomly performed on approximately 40% of the image blocks. All the image blocks are then input into the Vision Transformer encoder to obtain the feature vectors. These feature vectors are then input into the decoder for decoding to restore the masked image blocks. The loss between the image blocks restored by the decoder and the actual image blocks is subsequently computed, and the model parameters of the encoder and decoder are updated using the loss value and the backpropagation algorithm. A distributed computing method is used to accelerate the model forward inference and training update processes, as indicated by the blue box in the upper left corner of Fig.3.
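The block splitting, random masking, and reconstruction loss described above can be sketched in a few lines. This is only an illustration under stated assumptions: the helper names (`split_into_blocks`, `random_mask`, `masked_mse`) are hypothetical, the encoder and decoder are replaced by a placeholder output, and the loss is restricted to the masked blocks, as is common in masked-autoencoder training but not stated explicitly in the text.

```python
import random

def split_into_blocks(image, block):
    """Split a 2-D image (list of rows) into flattened block x block patches.
    Assumes the image height and width are multiples of `block`."""
    h, w = len(image), len(image[0])
    blocks = []
    for top in range(0, h, block):
        for left in range(0, w, block):
            patch = [image[r][c]
                     for r in range(top, top + block)
                     for c in range(left, left + block)]
            blocks.append(patch)
    return blocks

def random_mask(num_blocks, mask_ratio, rng):
    """Choose which block indices are hidden from the model."""
    num_masked = round(num_blocks * mask_ratio)
    return set(rng.sample(range(num_blocks), num_masked))

def masked_mse(original, restored, masked):
    """Mean squared error over the masked blocks only (an assumption)."""
    total, count = 0.0, 0
    for i in masked:
        for a, b in zip(original[i], restored[i]):
            total += (a - b) ** 2
            count += 1
    return total / count

rng = random.Random(0)
image = [[float(r * 8 + c) for c in range(8)] for r in range(8)]
blocks = split_into_blocks(image, block=2)            # 16 blocks of 4 pixels
masked = random_mask(len(blocks), mask_ratio=0.4, rng=rng)

# Placeholder for the decoder output; a trained model would predict these.
restored = [[0.0] * len(b) for b in blocks]
loss = masked_mse(blocks, restored, masked)
```

In a real system the `loss` value would be backpropagated through the decoder and encoder; here it only shows which quantities enter the objective.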

Fig.4 System architecture of automated deep learning system for power line inspection image analysis and processing

The process of constructing a vision-based model using pre-training techniques involves the three key factors comprising the network architecture of the pre-trained model [14], its learning paradigm [15], and model training acceleration [16]. These factors are crucial in achieving the optimal performance, generalizability, and scalability of an automated deep learning system for electric power UAV inspection image analysis and processing.

3.1 Pre-trained model architecture

Computer vision models with deep CNNs as backbones have been widely used in electric power line inspection tasks [17]. In 2014, the Visual Geometry Group team [18] proposed the VGG-Net backbone network, which features stacks of multiple convolutional kernels. It significantly improved the spatial feature learning capability of CNNs and was the first widely used pre-trained network framework. In 2016, He et al. [19] designed the residual learning mechanism, which utilizes residual block shortcut cross-layer connections, and proposed the ResNet network. In the ResNet network, the number of network layers is increased to up to 152 and the network is capable of multilevel feature extraction, making it the most widely used computer vision pre-training backbone network.

The focus in recent research on deep convolutional networks is on reducing the scale of the model parameters without compromising the model accuracy. This is because further increasing the number of layers in deep convolutional networks does not necessarily improve their feature extraction ability, which places a limit on the generalizability of deep convolutional networks as pre-trained models. For instance, Tan et al. [20] proposed a balanced scaling method for the depth/width/resolution dimensions of deep CNNs and constructed the EfficientNet backbone network using a neural network architecture search technique. The EfficientNet backbone network improves the generalization ability of the model while reducing the scale of the model parameters.

However, there is a need for a backbone model with even stronger generalization ability to improve the accuracy of the electric power UAV inspection image processing model owing to the complexity of the image processing task. This challenge arises because this task involves massive and constantly updated UAV inspection image processing data. An adaptive and robust backbone model that can handle the data drift is therefore required. An advanced backbone network with enhanced generalization capabilities is also required to enable efficient feature extraction from complex and dynamic data in electric power UAV inspection tasks.

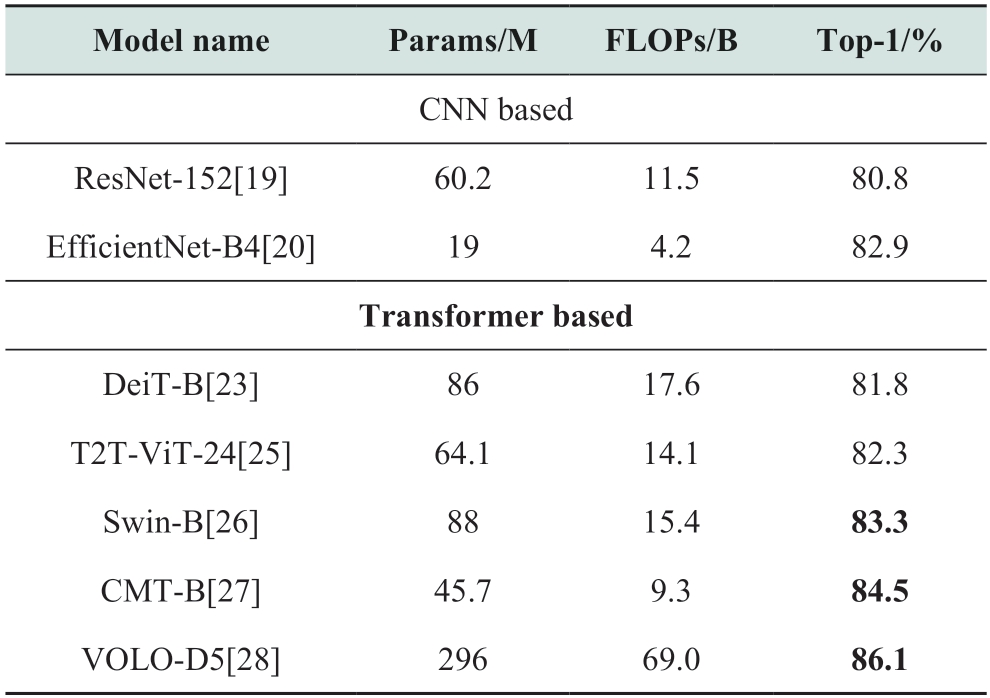

The Transformer neural network architecture [21] proposed by Vaswani et al. in 2017 has demonstrated greater generalization capability and performance in both natural language processing [22] and computer vision [23] compared to traditional models. Table 1 shows the performance of pre-trained Transformer models on the ImageNet public dataset [24], which is often used to evaluate the feature extraction capability of pre-trained models in computer vision. The performance of the Transformer model surpasses that of deep convolutional networks. This paper therefore focuses on the progress achieved in using the Transformer architecture as a pre-training network architecture.

Table 1 Performance of pre-trained models on ImageNet

Dosovitskiy et al. [23] proposed the Vision Transformer (ViT) method, in which images are segmented into 16 × 16 image blocks and used as token inputs to the Transformer network architecture. This was the first time that the pure Transformer architecture was used in computer vision. Yuan et al. [25] further proposed the Transformer in Transformer (TNT) network, in which the Transformer structure is nested within a Transformer network structure. The model performance is improved through the decomposition of the image blocks by the TNT network into subimage blocks for processing. Liu et al. [26] proposed the Swin-Transformer network, in which a windowing mechanism is introduced into the Transformer architecture and a sliding window is used to improve the ability of the model to extract features from the overlapping parts of the image blocks. Guo et al. [27] combined the Transformer network structure with a deep convolutional network structure, in which the Transformer network is used to capture long-range features and convolution is applied to capture local features. This approach improved the performance and accuracy of the model.

Yuan et al. [28] further proposed the Vision Outlooker (VOLO) network, in which Outlook Attention, a new attention mechanism, is used to improve the ability to encode fine-level image features compared to the traditional self-attention mechanism. This approach improved the accuracy of the model to 86.1%, which is 3.2% higher than that of the EfficientNet model.

In summary, the use of the Transformer architecture as a pre-training network architecture in computer vision has achieved significant progress and demonstrated greater generalizability compared to deep convolutional networks. The development of more advanced Transformer-based backbone models is a promising direction for improving the accuracy of automated deep learning systems for electric power UAV inspection image analysis and processing.

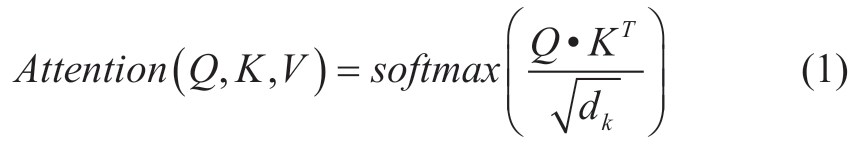

The special feature of the Transformer model is that a multi-headed self-attention mechanism is used to transform the input into three vectors comprising the query vector q, key vector k, and value vector v. The q, k, and v vectors of different inputs are packed into the matrices Q, K, and V, respectively, and the feature values are calculated as

$$\mathrm{Attention}(Q,K,V)=\mathrm{softmax}\left(\frac{QK^{\mathrm{T}}}{\sqrt{d_k}}\right)V,$$

where $d_k$ is the dimension of the key vectors.

The multi-headed attention process begins with duplicating each of the Q, K, and V matrices into h copies so that the input becomes $\{(Q_i,K_i,V_i)\}_{i=1}^{h}$. The image feature vector for the fused multi-location feature information (i.e., head) is then obtained as

$$\mathrm{MultiHead}(Q,K,V)=\mathrm{Concat}(\mathrm{head}_1,\ldots,\mathrm{head}_h)W^{O},$$

where

$$\mathrm{head}_i=\mathrm{Attention}(Q_i,K_i,V_i)$$

and $W^{O}$ is the output projection matrix.

Compared to convolutional networks, the advantage of the self-attention mechanism is that the location information of images is considered and targets can be identified based on the information around key targets. This makes the network more flexible and effective for tasks in which the target size and direction are not fixed. Further, the multi-headed attention mechanism enables the extraction of information from multiple locations by introducing multiple self-attention mechanisms in a single neural network layer. This enables the network to use more than a single feature for judgment and improves its feature extraction ability under complex conditions, which in turn improves the performance of the model in complex scenarios such as electric power UAV inspection.
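The attention computation discussed in this subsection can be illustrated with a small, dependency-free sketch of the standard scaled dot-product form, softmax(QK^T/sqrt(d_k))V. The sketch makes two simplifying assumptions that are not taken from the paper: each head is obtained by slicing the feature dimension rather than by learned per-head projections, and the final output projection is omitted. All function names are illustrative.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)

def multi_head(q, k, v, h):
    """Run attention per head and concatenate the head outputs.
    Heads are formed by slicing features (a stand-in for learned projections)."""
    d = len(q[0]) // h

    def head_slice(m, i):
        return [row[i * d:(i + 1) * d] for row in m]

    heads = [attention(head_slice(q, i), head_slice(k, i), head_slice(v, i))
             for i in range(h)]
    return [sum((head[t] for head in heads), []) for t in range(len(q))]

# Two tokens with two features each
q = k = v = [[1.0, 0.0], [0.0, 1.0]]
single = attention(q, k, v)
fused = multi_head(q, k, v, h=2)
```

Each output row is a weighted average of the value rows, with the weights determined by how strongly the corresponding query matches each key.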

3.2 Learning paradigms

Learning paradigms for pre-trained model backbone networks can be classified into the two classes of supervised and self-supervised learning methods. Supervised learning is the most commonly used paradigm. In this paradigm, the model is trained on a large-scale public dataset such as ImageNet [24] to develop its feature extraction capability. The trained model backbone is later fine-tuned for downstream tasks such as electric power UAV inspection image processing. However, owing to the high cost of data annotation in the electric power domain, there is currently no large-scale public dataset available. The performance improvement of electric power UAV image processing models is hence hindered by the lack of data in the electric power scenario for pre-trained models to perform supervised learning on. In contrast, the aim in self-supervised learning is to utilize unlabeled data to improve the feature extraction capability of the model by designing auxiliary tasks that can exploit representative properties of the data itself as supervisory information [29]. Unlike supervised learning, self-supervised learning does not require labeling. A feature extraction capability similar to that of supervised learning can be achieved by the backbone of the pre-trained model through increasing the training data volume [30]. Self-supervised learning is thus a more suitable learning paradigm for pre-trained models in the electric power domain. The focus of this paper is therefore on the progress of research related to self-supervised training methods.

Based on the proxy task objectives, self-supervised learning methods can be classified into the three categories of generation-based, contrast-based, and generation-contrast-based methods [31]. Each of these categories involves the development of different proxy tasks to utilize the unlabeled data effectively. Self-supervised learning has become an essential aspect of pre-trained models for electric power UAV inspection image processing. It enables the development of more advanced automated deep learning systems by improving the feature extraction capability of the models.

Generation-based methods: Generation-based self-supervised learning methods involve training an encoder that can encode the input image as a feature vector and a decoder that can decode the feature vector to reconstruct the input image. Large-scale pre-trained models trained using generation-based self-supervised learning methods include autoregressive models such as Pixel-RNN [32] and WaveNet [33], as well as autoencoding models such as BERT [22], MAE [30], and VQ-VAE [34]. MAE is used as an example in the following to illustrate generation-based self-supervised learning.

Given an unlabeled dataset X consisting of high-dimensional data $x=(x_1,\ldots,x_n)$, a permutation $\pi$ of the set of natural numbers [1, n] is constructed. The probability density p(x) of the data in the autoregressive model can then be expressed as

$$p(x)=\prod_{i=1}^{n}p\left(x_{\pi_i}\mid x_{\pi_1},\ldots,x_{\pi_{i-1}},\theta\right),$$

where $\theta$ denotes the model parameters.

Because the focus of this paper is on models for images, the raster order is chosen, i.e., the identity permutation $\pi_i=i$ for $1\le i\le n$. The generative model is then trained by minimizing the negative log-likelihood of the image data, which is also referred to as the autoregressive loss function:

$$L_{\mathrm{AR}}=\mathbb{E}_{x\sim X}\left[-\log p(x)\right].$$

The mask loss function of BERT [22] can also be used. In MAE [30] and other models, a mask loss function is employed by constructing a subset $M\subset[1,n]$ in which each element of the set of natural numbers [1, n] appears independently with a probability of 0.15; M is called the mask. The model is trained by minimizing the negative log-likelihood of the masked elements conditioned on the unmasked elements $x_{[1,n]\setminus M}$:

$$L_{\mathrm{mask}}=\mathbb{E}_{x\sim X}\,\mathbb{E}_{M}\sum_{i\in M}\left[-\log p\left(x_i\mid x_{[1,n]\setminus M}\right)\right].$$

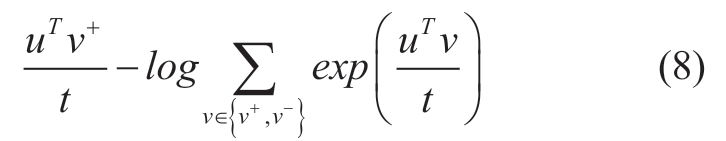
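As a small numerical illustration of the two generation-based objectives, the sketch below evaluates a negative log-likelihood over a raster-ordered sequence and over a randomly sampled mask. It assumes that the per-element conditional probabilities have already been produced by some model; the function names and the 0-based indexing are choices made for this example only.

```python
import math
import random

def sample_mask(n, p, rng):
    """Each index joins the mask independently with probability p."""
    return [i for i in range(n) if rng.random() < p]

def autoregressive_nll(cond_probs):
    """Negative log-likelihood when cond_probs[i] is the model's
    probability of element i given all earlier elements (raster order)."""
    return -sum(math.log(p) for p in cond_probs)

def masked_nll(cond_probs, mask):
    """Negative log-likelihood restricted to the masked indices, where
    cond_probs[i] is the probability of element i given the unmasked ones."""
    return -sum(math.log(cond_probs[i]) for i in mask)

rng = random.Random(0)
mask = sample_mask(1000, 0.15, rng)          # roughly 15% of the indices
ar_loss = autoregressive_nll([0.5, 0.5])     # two elements, each with p = 0.5
mk_loss = masked_nll([0.5, 0.25, 1.0], [1])  # only index 1 is masked
```

The autoregressive loss sums over every element, whereas the mask loss only penalizes the elements that were hidden from the model.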

Contrast-based methods: In contrast-based self-supervised learning methods, the encoder is trained to encode input images as feature vectors with the goal of outputting similar feature vectors for similar input images. Typical contrast-based self-supervised learning methods include CPC [35] and MoCo [36]. In the following, we use the contrast-based SimCLR [37] as an example to introduce contrast-based self-supervised learning methods.

In the SimCLR method, N samples from the unlabeled dataset are first selected and a copy of each sample is generated using data augmentation to obtain 2N data samples. During training, each sample and its augmented copy are treated as a positive pair. To simplify the notation, we define sim(u, v) as the dot product of the $\ell_2$-normalized samples u and v:

$$\mathrm{sim}(u,v)=\frac{u^{\mathrm{T}}v}{\lVert u\rVert\,\lVert v\rVert}.$$

The loss function for the positive pair $l_{i,j}$ is then defined as

$$l_{i,j}=-\log\frac{\exp\left(\mathrm{sim}(z_i,z_j)/\tau\right)}{\sum_{k=1}^{2N}\mathbb{1}_{[k\neq i]}\exp\left(\mathrm{sim}(z_i,z_k)/\tau\right)},$$

where $z_i$ is the feature vector of sample i, $\mathbb{1}_{[k\neq i]}\in\{0,1\}$ is an indicator function that takes the value of 1 when $k\neq i$, and $\tau$ is the temperature coefficient. Similarly, the loss function for the negative examples can be expressed as

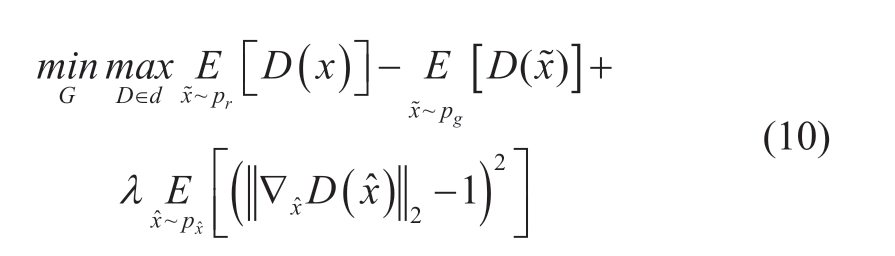
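The SimCLR positive-pair loss can be checked numerically with a short sketch. It assumes the standard form of the loss, in which the cosine similarity of a positive pair is contrasted against the similarities to all other samples in the batch and divided by a temperature; the batch layout (views of the same sample stored at adjacent positions) and the function names are assumptions made for illustration.

```python
import math

def sim(u, v):
    """Cosine similarity: dot product of the l2-normalized vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def pair_loss(z, i, j, tau):
    """Loss for the positive pair (i, j) against all other samples k != i."""
    numerator = math.exp(sim(z[i], z[j]) / tau)
    denominator = sum(math.exp(sim(z[i], z[k]) / tau)
                      for k in range(len(z)) if k != i)
    return -math.log(numerator / denominator)

def batch_loss(z, tau=0.5):
    """Average over both orderings of every positive pair.
    Assumes z[2m] and z[2m + 1] are the two views of sample m."""
    total = 0.0
    for m in range(len(z) // 2):
        i, j = 2 * m, 2 * m + 1
        total += pair_loss(z, i, j, tau) + pair_loss(z, j, i, tau)
    return total / len(z)

# Views of the same sample agree: low loss
aligned = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
# Views of the same sample are orthogonal: high loss
misaligned = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
```

Calling `batch_loss(aligned)` yields a smaller value than `batch_loss(misaligned)`, which reflects the training goal of pulling the two views of a sample together while pushing other samples apart.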

Generation-contrast-based methods: In generation-contrast-based self-supervised learning methods, an encoder-decoder is trained to generate spurious samples and a discriminator is trained to distinguish spurious samples from the actual samples by using the discriminative loss as the training target. A typical class of generation-contrast neural networks is generative adversarial networks (GANs) [38].

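WGAN-GP [39] is used as an example to introduce the loss function in generation-contrast neural networks. The generative adversarial network consists of a generator G(·) and a discriminator D(·). The generator generates spurious samples $\tilde{x}=G(z)$ based on the random noise z. The discriminator is trained to evaluate the original samples x and the generated samples $\tilde{x}$ using the following loss function:

$$L=\mathbb{E}_{\tilde{x}\sim P_g}\left[D(\tilde{x})\right]-\mathbb{E}_{x\sim P_r}\left[D(x)\right],$$

where $P_r$ and $P_g$ denote the distributions of the original and generated samples, respectively.

In WGAN-GP, the convergence and speed of the model training process are improved by adding a gradient penalty regular term to the WGAN loss function:

$$L=\mathbb{E}_{\tilde{x}\sim P_g}\left[D(\tilde{x})\right]-\mathbb{E}_{x\sim P_r}\left[D(x)\right]+\lambda\,\mathbb{E}_{\hat{x}\sim P_{\hat{x}}}\left[\left(\lVert\nabla_{\hat{x}}D(\hat{x})\rVert_2-1\right)^2\right],$$

where $\hat{x}$ is the randomly generated sample, drawn along the line between an original sample and a generated sample, and $\lambda$ is the penalty coefficient.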
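To make the gradient penalty concrete, the following sketch evaluates a WGAN-GP style discriminator loss for a deliberately tiny critic, D(x) = tanh(w · x), whose input gradient can be written by hand. The critic, the helper names, and the penalty weight are assumptions chosen so that the example runs without an automatic differentiation library; a practical system would use a neural network critic and a deep learning framework.

```python
import math
import random

def critic(w, x):
    """Toy critic D(x) = tanh(w . x)."""
    return math.tanh(sum(a * b for a, b in zip(w, x)))

def critic_grad(w, x):
    """Analytic gradient of the toy critic with respect to its input x."""
    scale = 1.0 - critic(w, x) ** 2
    return [scale * a for a in w]

def wgan_gp_loss(w, real, fake, lam, rng):
    """Wasserstein term plus lam times the mean gradient penalty."""
    n = len(real)
    wasserstein = (sum(critic(w, f) for f in fake) / n
                   - sum(critic(w, r) for r in real) / n)
    penalty = 0.0
    for r, f in zip(real, fake):
        eps = rng.random()
        # Random point on the line between a real and a generated sample
        x_hat = [eps * a + (1.0 - eps) * b for a, b in zip(r, f)]
        grad_norm = math.sqrt(sum(g * g for g in critic_grad(w, x_hat)))
        penalty += (grad_norm - 1.0) ** 2
    return wasserstein + lam * penalty / n

w = [1.0, 0.0]
real = [[1.0, 0.0], [2.0, 0.0]]
fake = [[-1.0, 0.0], [-2.0, 0.0]]
base = wgan_gp_loss(w, real, fake, lam=0.0, rng=random.Random(0))
penalized = wgan_gp_loss(w, real, fake, lam=10.0, rng=random.Random(0))
```

With `lam=0.0` the function reduces to the plain Wasserstein term; a positive `lam` adds a non-negative cost whenever the gradient norm at the interpolated points deviates from 1.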

Table 2 shows the accuracy of pre-trained models based on supervised and self-supervised methods fine-tuned for the COCO dataset. The results indicate that Transformer models pre-trained using self-supervised learning methods outperform those pre-trained using supervised learning methods and deep CNN backbones, even after fine-tuning for the target detection task.

Table 2 Performance of models pre-trained using different learning paradigms after fine-tuning on COCO

In the field of electric power research, Chen et al. [42] significantly improved the accuracy and speed of electric power line identification using the generation-based self-supervised learning method IBS, whereas Wang et al. [43] improved the average accuracy of glass insulator defect detection to 84.6% using an improved generative adversarial network. In general, because current generation-based methods impose fewer restrictions on the type and size of unlabeled data, they are more suitable for building large-scale pre-trained electric power vision models.

3.3 Model training acceleration

Although the use of self-supervised learning and Transformer architectures has enhanced the feature extraction ability of models, this improvement comes at the cost of a significant increase in the number of parameters. While the BERT model released in October 2018 [22] has 340 million parameters, the parameter size of the cross-modal DALL-E model launched in January 2021 is 12 billion [44]. Such a massive number of parameters prevents large-scale pre-trained models from being trained on a single server. The use of distributed computing is therefore unavoidable in the training of large-scale pre-trained models for electric power vision applications. The current distributed model training system architectures can be divided into the two main categories of the parameter server [45] and the ring All-Reduce [46] models.

The parameter server model comprises the two main components of a central parameter server and worker nodes. In this model, the worker nodes are primarily responsible for forward inference and gradient computation using GPU computing resources, while the central parameter server is responsible for updating the model parameters using the gradients computed by the worker nodes. In comparison, in the ring All-Reduce distributed computing architecture, all the computing nodes are equivalent and the gradient computation results are synchronized through broadcasting, which offers superior performance. However, the scalability of this approach is limited. To address this, data [47], pipeline [48], and tensor [49] parallelism techniques are applied on top of the distributed computing architecture in automated data model building systems. These techniques facilitate the training of very large electric power vision models while saving time and resources.
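The difference between the two architectures can be illustrated with a single-process simulation. The sketch below is a toy model, not a distributed implementation: workers are plain Python lists, each gradient is assumed to be pre-split into as many chunks as there are workers, and the function names are hypothetical. The ring variant follows the usual two-phase scheme (a reduce phase followed by a gather phase), which is one common way to realize ring All-Reduce.

```python
def parameter_server_reduce(grads):
    """Central server sums every worker's chunks and returns one result."""
    n_chunks = len(grads[0])
    return [[sum(vals) for vals in zip(*(worker[c] for worker in grads))]
            for c in range(n_chunks)]

def ring_all_reduce(grads):
    """Simulate ring All-Reduce; grads[i][c] is chunk c held by worker i.
    Every worker ends up holding the element-wise sum of all gradients."""
    n = len(grads)
    data = [[list(chunk) for chunk in worker] for worker in grads]

    # Phase 1: each worker passes one chunk to its right neighbour,
    # which adds it to its own copy. After n - 1 steps, worker i holds
    # the fully summed chunk (i + 1) % n.
    for step in range(n - 1):
        sends = [(i, (i - step) % n, list(data[i][(i - step) % n]))
                 for i in range(n)]
        for src, c, payload in sends:
            dst = (src + 1) % n
            data[dst][c] = [a + b for a, b in zip(data[dst][c], payload)]

    # Phase 2: the fully summed chunks travel around the ring and
    # overwrite the partial copies held by the other workers.
    for step in range(n - 1):
        sends = [(i, (i + 1 - step) % n, list(data[i][(i + 1 - step) % n]))
                 for i in range(n)]
        for src, c, payload in sends:
            dst = (src + 1) % n
            data[dst][c] = payload
    return data

# Three workers, each holding a gradient split into three one-element chunks
grads = [[[10 * i + c] for c in range(3)] for i in range(3)]
central = parameter_server_reduce(grads)
ring = ring_all_reduce(grads)
```

In the parameter server version all traffic passes through one node, whereas in the ring version each worker only exchanges one chunk per step with its neighbour, so no single node handles the full gradient traffic.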

4 Fine-tuning techniques (downstream task adaptation)

After pre-training, the model is adapted to the downstream task in the fine-tuning stage. This stage comprises the two critical aspects of downstream task adaptation and computational resource adaptation. The first aspect involves adapting the model to the requirements of the downstream task, whereas the second involves accommodating the computation and storage resource requirements of the model to the limitations of the environment the model is run in. The focus in this study is on transfer learning, which addresses the problems of downstream task and data adaptation. The advancements in knowledge distillation and its applications in handling the computation environment challenges in the electric power field are also reviewed.

4.1 Deep transfer learning

Transfer learning refers to the technique of using a model designed to solve one problem to solve a different problem,whereas deep transfer learning [50] involves applying a deep learning model aimed at tackling a particular problem to resolve a different problem.There are four important concepts in transfer learning comprising the source domain Ds,target domain DT source learning task TS,and target learning task TD.We take UAV transmission line inspection as an example to illustrate these concepts.In this context,the source domain Ds is a large number of unlabeled image samples obtained from the public dataset for pre-training the vision model; the target domain DT is the inspection image sample set annotated by electric power experts used to train the UAV image processing model;the source learning task TS is the self-supervised learning method used to train the large-scale pre-trained model;and the target learning task TD is the UAV inspection image processing task,which involves subtasks such as image classification,target recognition,and semantic segmentation.Fine-tuning the pre-trained vision model for UAV transmission line inspection image analysis is an inductive transfer learning task.In this study,the purpose of deep inductive transfer learning is to use the source domain Ds and source learning task TS (a large-scale electric power vision pre-trained model) to perform the target learning task TD on the target domain DT.

Deep transfer learning techniques have been widely used in the electric power field. Ma et al. [51] used deep transfer learning for the small-sample training of substation power equipment component detection models. Yang et al. [52] studied deep transfer learning for transmission line fault phase selection models. Zhou et al. [53] proposed a transfer learning-based cable tunnel rust identification algorithm. Notably, all of these studies employed convolutional networks for transfer learning. However, as shown in the pre-trained model performance comparison in Tables 1 and 2, pre-trained models based on the Transformer architecture achieved better performance than those based on deep convolutional networks. This study therefore focuses primarily on deep transfer learning techniques based on Transformer pre-trained models.

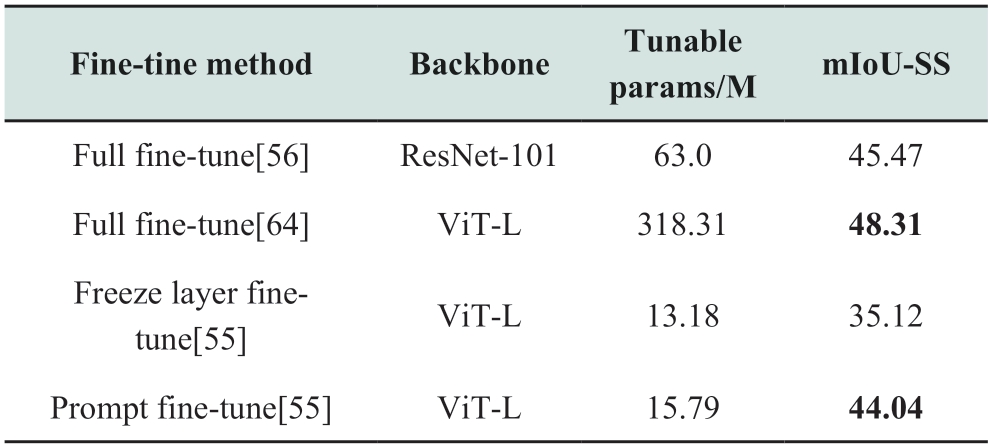

Inductive transfer learning is primarily used to fine-tune pre-trained Transformer models and is broadly categorized as a model-based transfer learning technique [50]. Inductive transfer techniques consist mainly of model parameter control strategies. The three primary strategies are full fine-tuning [23], frozen layer fine-tuning [54], and prompt fine-tuning [55].

The electric power vision large-scale pre-trained model architecture comprises four components: the encoder, decoder, neck, and head. Before the model parameter control strategy is executed, the encoder and decoder of the pre-trained model are used as the backbone network for target recognition [4] and semantic segmentation [56].

In full fine-tuning, stochastic gradient descent-type methods are used to update all the parameters in the backbone network, neck, and head, as in ViT-FRCNN [57], TNT [58], PVT [59], and YOLOS [60]. Although full fine-tuning provides the best performance on the target task, it requires a large number of labeled training samples to limit overfitting. It also requires the most computing power and storage and the longest training time because it updates the largest number of model parameters.

Frozen layer fine-tuning exploits the fact that the backbone network has the strongest generalization ability and can adapt to multiple target tasks, whereas the neck and head have the weakest generalization ability, are highly task-specific, and can only be adapted to specific tasks [54]. This method is widely used for fine-tuning computer vision models with convolution-based architectures: the parameters of the backbone network are frozen, and only the parameters in the neck and head are updated [54; 61; 62]. Although frozen layer fine-tuning adjusts the fewest model parameters, its performance is significantly degraded relative to full fine-tuning in the transfer learning of vision models based on the Vision Transformer architecture [55].

Prompt fine-tuning is a fine-tuning method for vision models based on the Transformer architecture. It freezes the majority of the parameters and inserts lightweight trainable modules into the backbone network [55; 63]. To enhance the adaptability of the backbone network to the target domain and task, the bias terms in the modules can be modified [61] or additional learnable parameters can be added to transform the visual features [62]. These methods require relatively little computational power, storage, and training data.
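The three parameter control strategies differ mainly in which parameter groups receive updates. The following minimal sketch makes that difference concrete. The component names, the parameter counts, and the choice to keep the neck and head trainable under prompt fine-tuning are illustrative assumptions, not values or settings taken from the cited works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParamGroup:
    name: str        # hypothetical component name
    num_params: int  # illustrative count, not a measured value


# Assumed example model: the sizes are placeholders chosen for illustration.
EXAMPLE_GROUPS = [
    ParamGroup("backbone", 86_000_000),
    ParamGroup("neck", 2_000_000),
    ParamGroup("head", 1_000_000),
    ParamGroup("prompt", 460_800),  # only present in prompt fine-tuning
]


def trainable_groups(groups, strategy):
    """Return the names of the parameter groups updated by a strategy."""
    if strategy == "full":
        # Full fine-tuning: backbone, neck, and head are all updated.
        wanted = {"backbone", "neck", "head"}
    elif strategy == "frozen":
        # Frozen layer fine-tuning: the backbone is fixed.
        wanted = {"neck", "head"}
    elif strategy == "prompt":
        # Prompt fine-tuning: the backbone is fixed and the inserted
        # lightweight modules are trained (neck/head trainable by assumption).
        wanted = {"prompt", "neck", "head"}
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [g.name for g in groups if g.name in wanted]


def trainable_param_count(groups, strategy):
    """Total number of parameters that receive gradient updates."""
    active = set(trainable_groups(groups, strategy))
    return sum(g.num_params for g in groups if g.name in active)
```

With these placeholder sizes, prompt fine-tuning updates only slightly more parameters than frozen layer fine-tuning and far fewer than full fine-tuning, which is the trade-off described above.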

The VPT method [55] is used as an example to illustrate the principle of prompt fine-tuning in detail. In a ViT model with $N$ layers [23], the image is divided into $m$ fixed-size image blocks $\{I_j \in \mathbb{R}^{3 \times h \times w} \mid j \in \mathbb{N}, 1 \le j \le m\}$, where $h$ and $w$ are the height and width of the image blocks, respectively, and each image block and its position information are input into a $d$-dimensional linear layer for encoding:
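A form of this encoding step that is consistent with the definitions in this section is sketched below. The function name Embed and the layer index 0 on the encoded blocks are notational assumptions borrowed from the usual ViT formulation rather than symbols defined in the text.

```latex
e_0^{j} = \mathrm{Embed}(I_j), \qquad e_0^{j} \in \mathbb{R}^{d}, \quad j = 1, 2, \ldots, m
```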

We denote the set of image block encodings that is input to the $(i+1)$th Transformer layer $L_{i+1}$ as $E_i = \{e_i^{j} \in \mathbb{R}^{d} \mid j \in \mathbb{N}, 1 \le j \le m\}$; it enters layer $i+1$ together with the annotation information, which is carried as an additional token. The entire ViT model can then be expressed as
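Under the same notation, and assuming the standard layer-by-layer ViT formulation with $N$ layers, the model can be written as:

```latex
\begin{aligned}
[x_i, E_i] &= L_i\big([x_{i-1}, E_{i-1}]\big), \qquad i = 1, 2, \ldots, N \\
y &= \mathrm{Head}(x_N)
\end{aligned}
```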

where $x_i \in \mathbb{R}^{d}$ denotes the annotation information at the input of layer $L_{i+1}$, $[\cdot,\cdot]$ denotes concatenation, and Head is the final layer, which maps the annotation information $x_N$ output by the $N$th layer to the output $y$, that is, annotations such as rectangular boxes, segmented regions, and image categories that can be rendered on the image.

Given a pre-trained ViT model, the VPT method introduces a set of $p$ continuous $d$-dimensional embeddings (i.e., prompts) into the input space after the embedding layer. During tuning, only the task-specific prompts are updated, while the Transformer backbone is kept frozen. There are two variants of the VPT approach, VPT-shallow and VPT-deep, which differ in the number of Transformer layers that receive prompts.

In the VPT-shallow method, the prompt tokens are inserted only into the first Transformer layer $L_1$. Each prompt token is a learnable $d$-dimensional vector. Denoting the set of $p$ prompt tokens as $P = \{p^{k} \in \mathbb{R}^{d} \mid k \in \mathbb{N}, 1 \le k \le p\}$, the VPT-shallow method can be expressed as
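Assuming the usual VPT formulation and the notation already introduced ($E_0$ for the encoded image blocks and $x_0$ for the annotation token at the input of the first layer), the shallow variant can be written as:

```latex
\begin{aligned}
[x_1, Z_1, E_1] &= L_1\big([x_0, P, E_0]\big) \\
[x_i, Z_i, E_i] &= L_i\big([x_{i-1}, Z_{i-1}, E_{i-1}]\big), \qquad i = 2, 3, \ldots, N \\
y &= \mathrm{Head}(x_N)
\end{aligned}
```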

where $Z_i \in \mathbb{R}^{p \times d}$ represents the feature information captured by the $i$th Transformer layer. In the VPT-shallow method, $x_0$ and the layers $L_1$ and $L_i$ are frozen, whereas $P$ and $\mathrm{Head}(\cdot)$ are updated during training.

In the VPT-deep method, prompts are introduced into the input space of every Transformer layer. Denoting the set of learnable prompts input to layer $L_{i+1}$ as $P_i = \{p_i^{k} \in \mathbb{R}^{d} \mid k \in \mathbb{N}, 1 \le k \le m\}$, the VPT-deep method can be expressed as
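Assuming the usual VPT formulation, the deep variant can be written as follows. The underscore is an assumed placeholder for the prompt-position outputs of layer $L_i$, which are discarded and replaced by the next layer's own learnable prompts.

```latex
\begin{aligned}
[x_i, \_, E_i] &= L_i\big([x_{i-1}, P_{i-1}, E_{i-1}]\big), \qquad i = 1, 2, \ldots, N \\
y &= \mathrm{Head}(x_N)
\end{aligned}
```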

in which $L_i$ is frozen, whereas $P_{i-1}$ and $\mathrm{Head}(\cdot)$ are updated during training.
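The storage cost of the two variants follows directly from where the prompts are inserted. The sketch below counts the learnable prompt parameters, excluding the task head. The example configuration (12 layers and embedding dimension 768, as in a ViT-Base backbone, with 50 prompts) is an assumption chosen for illustration and is not a setting reported in this paper.

```python
def vpt_prompt_params(num_layers, embed_dim, num_prompts, deep):
    """Count learnable prompt parameters (task head excluded).

    VPT-shallow inserts prompts only before the first layer;
    VPT-deep learns a separate prompt set for every layer.
    """
    layers_with_prompts = num_layers if deep else 1
    return layers_with_prompts * num_prompts * embed_dim


# Assumed illustrative configuration: 12 layers, d = 768, p = 50.
SHALLOW = vpt_prompt_params(12, 768, 50, deep=False)
DEEP = vpt_prompt_params(12, 768, 50, deep=True)
```

In this configuration the deep variant stores 12 times as many prompt parameters as the shallow variant, yet both remain small next to a backbone with tens of millions of parameters.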

Table 3 illustrates the effects of different fine-tuning methods on pre-trained models using the ADE20k dataset [34]. Full fine-tuning resulted in the highest accuracy on the dataset but required significant computational power and longer fine-tuning times because a larger number of parameters had to be adjusted. Prompt fine-tuning, in which only 5% of the parameters adjusted in full fine-tuning were updated, resulted in slightly degraded model performance.

Table 3 Performance of pre-trained models with different fine-tuning methods on the ADE20k semantic segmentation dataset

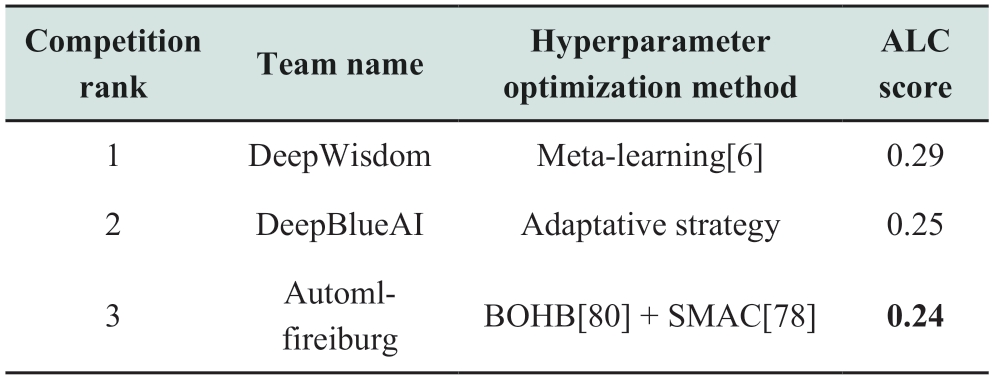

Table 4 Hyperparameter optimization methods in AutoML competition [79]

In summary, full fine-tuning is suitable for scenarios with ample effective training samples, whereas prompt fine-tuning is more appropriate when fewer effective training samples are available. For downstream tasks with small, hard-to-recognize targets and few effective training samples, such as the identification of power lines and of rust on metal fittings, prompt fine-tuning should be used for model construction. In contrast, for downstream tasks that involve large targets and for which a significant number of effective training samples are available, such as bird nest and transmission equipment body identification, full fine-tuning should be employed.

4.2 Knowledge distillation

Knowledge distillation [65] is a model compression technique that involves a teacher-student training structure. In the automated training of lightweight edge UAV inspection image processing models, large pre-trained vision models can be regarded as teachers that provide knowledge. Knowledge is distilled from these complex pre-trained vision models to train lightweight and efficient edge models at the cost of a slight loss in performance. According to the type of knowledge transferred, knowledge distillation approaches can be categorized into output, intermediate, and relational feature distillation.

Output feature knowledge refers to the feature vector output by the last layer of a pre-trained electric power vision model. The primary objective of output feature knowledge distillation [65; 66] is to allow a lightweight edge model to directly mimic the final prediction of the pre-trained model. Being the simplest approach, output feature knowledge distillation is widely used in various applications and tasks. However, it has limitations, such as a heavy reliance on the output of the last layer. This makes it difficult for an edge-side model to learn feature knowledge from the intermediate layers of the electric power vision model, which is crucial for enhancing model performance.
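As an illustration of how an edge model can be trained to mimic the final prediction of a teacher, the sketch below implements the commonly used temperature-softened formulation: the Kullback-Leibler divergence between the softened teacher and student output distributions, scaled by the squared temperature. Whether the cited works use exactly this loss is not stated in the text, and the default temperature of 4.0 is an arbitrary assumption.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax of logits divided by a temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on softened outputs, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(
        pt * math.log(pt / ps)
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0.0
    )
    return temperature ** 2 * kl
```

The loss is zero when the student reproduces the teacher's logits and grows as the two softened distributions diverge. In practice it is usually combined with the ordinary supervised loss on the labeled samples.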

Deep neural networks can learn multilayer feature representations effectively by increasing the network depth. This is referred to as representation learning [67]. The feature outputs of both the last and intermediate layers of large-scale electric power vision models can serve as knowledge for supervising the training of lightweight edge models. In this study, intermediate feature knowledge refers to the intermediate layer outputs of a pre-trained vision model. Intermediate feature knowledge distillation [68] is an extension of output feature knowledge distillation that provides particular advantages for training lightweight edge networks. Although intermediate feature knowledge provides useful information for training the lightweight edge model, selecting effective hint layers from the pre-trained vision model and guided layers from the edge model for the specific task is a crucial consideration.

Both output and intermediate feature knowledge distillation are based on the outputs of specific layers in a large-scale pre-trained vision model. Relational feature knowledge distillation further explores the relationships between data samples and between different layers. Building on traditional knowledge transfer methods, which distill only individual pieces of knowledge, Yim et al. [69] proposed the flow of solution procedure (FSP) matrix, a Gram matrix computed between two layers that summarizes the relationships between pairs of feature maps. The FSP matrix is calculated using the inner product of the features in the two layers. Liu et al. [70] proposed a robust and effective knowledge distillation method using instance relational graphs that convey the relationships between instance features, instance relations, and feature space transformations across layers. Although a number of techniques for knowledge distillation based on relational features already exist, further research is required on how to model the relational information in feature graphs or data samples effectively as knowledge.
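The inner-product computation behind the FSP matrix can be sketched as follows. The feature maps are assumed to be nested lists indexed as height, width, channel with equal spatial size, and the normalization by the number of spatial positions and the squared-error loss are assumptions based on the common formulation rather than details given in the text.

```python
def fsp_matrix(feat_a, feat_b):
    """Gram matrix between two feature maps of equal spatial size.

    feat_a: [H][W][C_a], feat_b: [H][W][C_b] -> matrix of shape [C_a][C_b],
    where entry (i, j) is the spatial average of feat_a[..][i] * feat_b[..][j].
    """
    height, width = len(feat_a), len(feat_a[0])
    if len(feat_b) != height or len(feat_b[0]) != width:
        raise ValueError("feature maps must share the same spatial size")
    ch_a, ch_b = len(feat_a[0][0]), len(feat_b[0][0])
    gram = [[0.0] * ch_b for _ in range(ch_a)]
    for row_a, row_b in zip(feat_a, feat_b):
        for vec_a, vec_b in zip(row_a, row_b):
            for i, a in enumerate(vec_a):
                for j, b in enumerate(vec_b):
                    gram[i][j] += a * b
    scale = height * width
    return [[value / scale for value in row] for row in gram]


def fsp_loss(teacher_fsp, student_fsp):
    """Mean squared difference between two FSP matrices of equal shape."""
    diffs = [
        (t - s) ** 2
        for row_t, row_s in zip(teacher_fsp, student_fsp)
        for t, s in zip(row_t, row_s)
    ]
    return sum(diffs) / len(diffs)
```

Because the matrix shape depends only on the channel counts of the two layers, a teacher and a student can be compared whenever their selected layer pairs have matching channel counts.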

Knowledge distillation has already been employed in the electric power field for various applications. Zhenbing et al. [71] proposed a transmission line bolt defect image classification method in which dynamic supervised knowledge distillation was used to balance model accuracy and resource consumption. Qi et al. [72] proposed an optimal knowledge transfer wide residual network for transmission line bolt defect image classification. However, both of these studies focused on knowledge distillation in CNNs. Knowledge distillation between large-scale pre-trained vision models and lightweight networks in the electric power field remains to be explored.

5 Automated machine learning

Although the current pre-training + fine-tuning deep learning paradigm has facilitated the construction of deep neural networks for specific tasks, fine-tuning pre-trained models still requires considerable human expertise. This poses difficulties because electric power line inspection managers typically lack knowledge of deep learning, which hinders the broad adoption of deep learning in the electric power field. Automated machine learning/deep learning (AutoML/DL) is a promising solution for building deep learning models without human intervention. AutoML/DL techniques can be classified into four major categories: automated sample processing, automatic feature engineering, hyperparameter optimization, and neural network architecture search [11]. Because the first two categories are primarily used in automated machine learning, this study focuses on the latter two, hyperparameter optimization and neural network architecture search.

5.1 Hyperparameter optimization

Retraining the pre-trained electric power vision model for different UAV image processing tasks is a critical step in downstream task adaptation.Each retraining entails adjusting the training hyperparameters based on the downstream task.Because the selected hyperparameters have a significant effect on the effectiveness of model training [63],automated hyperparameter optimization is an essential component in automated deep learning systems.

Hyperparameter optimization methods can be classified into four categories: random sampling, Bayesian optimization, gradient optimization, and heuristic methods. Random sampling methods include grid search and random search [63]. In grid search [73], the hyperparameter search space is divided into regular intervals, and the points at the endpoints of each interval are evaluated to determine the best-performing point. This process is computationally expensive and unsuitable for large hyperparameter search spaces. In contrast, in random search [74], candidate points in the hyperparameter search space are randomly selected and the best-performing point is selected through evaluation. Bayesian optimization methods, which combine a probabilistic surrogate model with an acquisition function, treat the deep learning hyperparameter search as a black-box problem of finding the optimal point in the hyperparameter space [75]. Unfortunately, standard Bayesian optimization is sequential and therefore difficult to parallelize, and its complexity increases with the dimensionality of the hyperparameter search space. Although gradient optimization methods, such as those based on model gradient information [76], can improve the hyperparameter search efficiency, they are applicable only to continuous and differentiable hyperparameters; therefore, their scope of application is relatively narrow. Heuristic methods such as genetic and particle swarm optimization algorithms have the advantages of being parallelizable and usable in non-continuous parameter spaces; however, they may not always yield stable or optimal results [77; 78].
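The difference between grid search and random search can be illustrated with a minimal sketch. The objective below is a hypothetical stand-in for the validation loss of a fine-tuned model, and the hyperparameter names, value lists, and bounds are assumptions chosen only for the example.

```python
import itertools
import random


def toy_objective(config):
    """Hypothetical validation loss; smaller is better."""
    return (config["lr"] - 0.01) ** 2 + (config["momentum"] - 0.9) ** 2


def grid_search(objective, grid):
    """Evaluate every combination of listed values; return (score, config)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        score = objective(config)
        if best is None or score < best[0]:
            best = (score, config)
    return best


def random_search(objective, bounds, num_trials, seed=0):
    """Sample configurations uniformly within bounds; return (score, config)."""
    rng = random.Random(seed)
    best = None
    for _ in range(num_trials):
        config = {name: rng.uniform(low, high)
                  for name, (low, high) in bounds.items()}
        score = objective(config)
        if best is None or score < best[0]:
            best = (score, config)
    return best


GRID = {"lr": [0.001, 0.01, 0.1], "momentum": [0.8, 0.9, 0.99]}
BOUNDS = {"lr": (0.001, 0.1), "momentum": (0.8, 0.99)}
```

Grid search evaluates the product of all listed values (nine configurations here), so its cost grows multiplicatively with each added hyperparameter, whereas random search evaluates exactly the requested number of trials regardless of dimensionality.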

Liu et al. [79] hosted an AutoDL competition in 2019. The top three teams used hyperparameter optimization methods to fine-tune models for optimal performance under limited computational resources. The first-place DeepWisdom team applied meta-learning [6] to develop several sets of hyperparameter templates in advance. The second-place DeepBlueAI team used an adaptive parameter construction method to adjust the parameters based on the image size. The third-place automl_freiburg team applied the Bayesian optimization method BOHB [80] and the heuristic method SMAC [78] to search for hyperparameters.

In conclusion, the computing resources available to the system must be considered when choosing the hyperparameter optimization method for an automated deep learning system. When only limited computational resources are available, meta-learning with pre-developed sets of hyperparameter templates can be employed for automated fine-tuning. In contrast, when computing power is not a constraint, Bayesian optimization-type hyperparameter search methods or population-based search algorithms can be employed for automatic hyperparameter tuning.

5.2 Neural network architecture search

Because knowledge distillation requires a carefully designed lightweight and efficient edge model (student network),the architecture of the edge model needs to be constructed automatically using neural network architecture search (NAS).NAS is one of the most popular AutoML techniques for automatically identifying deep neural models and adaptively learning the appropriate deep neural structures.Successful knowledge transfer in knowledge distillation depends not only on the knowledge of the teacher but also on the architecture of the student.However,a capacity gap may exist between large teacher models and small student models,which makes it challenging for students to learn well from their teachers.To address this problem,we employ architecture-based knowledge distillation and NAS to identify the appropriate student architecture [81].

NAS techniques can be classified into four primary categories: techniques based on reinforcement learning [82], evolutionary algorithms [83], gradient descent [84], and surrogate models [85]. Techniques based on evolutionary algorithms and gradient descent are effective under different computing power environments. In evolutionary algorithm-based NAS, each candidate edge neural network architecture is treated as an individual in a population. The edge neural network architectures generated in each generation are selected through an evolutionary process. Crossover and mutation operations are performed on superior architectures to generate the next-generation population. This process is repeated until the architecture with the best performance is generated or the maximum number of evolution iterations is reached. Although evolutionary algorithms, which can be executed in parallel and are highly adaptable, have been used to search for knowledge distillation student networks in some applications [86], their search results may be unstable and their execution speeds are relatively slow.
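The selection, crossover, and mutation loop described above can be sketched in a few lines. The architecture encoding (a depth and width pair), the candidate values, and the fitness function are hypothetical stand-ins: in a real system the fitness would be the measured accuracy and cost of each trained or distilled student network.

```python
import random

DEPTHS = (2, 4, 6, 8)        # assumed candidate layer counts
WIDTHS = (16, 32, 64, 128)   # assumed candidate channel widths


def toy_fitness(arch):
    """Hypothetical proxy: reward capacity, penalize compute cost."""
    depth, width = arch
    accuracy_proxy = 1.0 - 1.0 / (depth * width) ** 0.5
    cost_penalty = 1e-4 * depth * width
    return accuracy_proxy - cost_penalty


def crossover(parent_a, parent_b, rng):
    """Take each gene (depth, width) from one of the two parents."""
    return (rng.choice((parent_a[0], parent_b[0])),
            rng.choice((parent_a[1], parent_b[1])))


def mutate(arch, rng, rate=0.2):
    """Resample each gene with probability `rate`."""
    depth, width = arch
    if rng.random() < rate:
        depth = rng.choice(DEPTHS)
    if rng.random() < rate:
        width = rng.choice(WIDTHS)
    return (depth, width)


def evolve(pop_size=8, generations=10, seed=0):
    """Return the fittest architecture after a fixed number of generations."""
    rng = random.Random(seed)
    population = [(rng.choice(DEPTHS), rng.choice(WIDTHS))
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the better half unchanged (elitism).
        population.sort(key=toy_fitness, reverse=True)
        parents = population[: pop_size // 2]
        # Crossover and mutation produce the rest of the next generation.
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    return max(population, key=toy_fitness)
```

Each individual's fitness can be evaluated independently, which is why this family of methods parallelizes well, while the repeated evaluation of whole populations explains its relatively slow execution.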

The edge model search spaces in reinforcement learning-, evolutionary algorithm-, and surrogate model-based NAS are discrete and non-differentiable, so a large number of candidate edge model architectures must be evaluated during the search process. In contrast, gradient optimization-based NAS converts the edge model architecture space into a continuous, differentiable space and optimizes it directly, which reduces the time required for training numerous networks. However, this method is prone to overfitting, which can make the edge UAV inspection model too biased towards either a fast runtime or high accuracy and result in instability. Gradient-based NAS has also been used to search for knowledge distillation student networks [87].
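One common way to make the architecture space differentiable is the continuous relaxation used in DARTS-style methods, in which each edge computes a softmax-weighted mixture of all candidate operations. Whether the cited works use exactly this relaxation is not stated in the text, and the scalar candidate operations below are hypothetical stand-ins for convolution or pooling layers.

```python
import math

# Hypothetical candidate operations on a scalar input.
CANDIDATE_OPS = {
    "identity": lambda x: x,
    "double": lambda x: 2.0 * x,
    "zero": lambda x: 0.0,
}


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def mixed_op(x, alphas):
    """Relaxed edge: softmax(alpha)-weighted sum of all candidate outputs."""
    names = list(CANDIDATE_OPS)
    weights = softmax([alphas[name] for name in names])
    return sum(w * CANDIDATE_OPS[name](x) for w, name in zip(weights, names))


def discretize(alphas):
    """After the search, keep only the operation with the largest alpha."""
    return max(alphas, key=alphas.get)
```

Because `mixed_op` is a smooth function of the architecture parameters `alphas`, they can be optimized by gradient descent together with the network weights, and `discretize` then recovers a single discrete architecture.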

In general, when sufficient computing power is available for training, it is more appropriate to use evolutionary algorithm-based NAS to determine the edge model network architecture. However, when computing power is limited and the automated model must be constructed quickly, it is more appropriate to employ gradient-based NAS.

6 System architecture

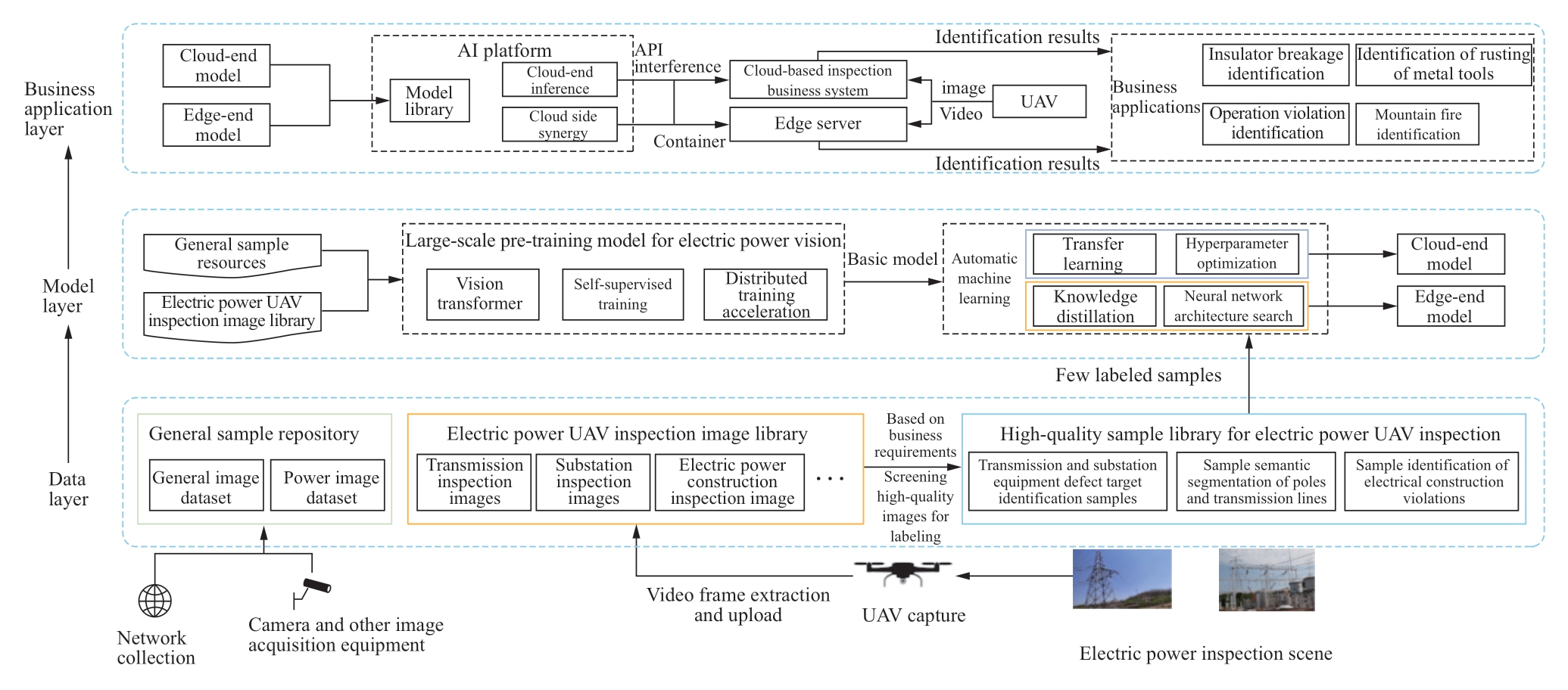

Based on the system design principles and key technologies discussed above,we developed the system architecture for an automated deep learning system used for power line inspection image analysis and processing shown in Fig.4.This system comprises three layers,namely,the data,model,and application layers.

The data layer comprises three main components: a universal image resource library, an electric power drone inspection image library, and an electric power drone inspection high-quality sample library. The universal image resource library stores a vast collection of image samples acquired from various sources such as online sources and cameras. These image samples include diagrams of different types of electric power equipment, images of electric power operation scenes, and the ImageNet dataset. Although the universal image resource library is large, its quality is inconsistent, and it is primarily used to provide the large-scale pre-trained model with foundational knowledge related to electric power tasks. The electric power drone inspection image library comprises original images obtained by UAVs during electric power inspection assignments. These images are classified by electric power scenario and used for pre-training the large-scale electric power vision model. In contrast, the electric power drone inspection high-quality sample library comprises a comparatively small number of samples from the electric power drone inspection image library that have been labeled and organized with key information such as electric power equipment defects. These samples are classified by specific electric power task and used for the customized tuning of the base model.

The model layer is the heart of the automated deep learning system.A large-parameter model pre-trained on either public datasets or unlabeled electric power data is used as the backbone in this layer to extract image features.The electric power vision pre-trained model is further finetuned using carefully labeled data from UAV inspection data to achieve image analysis and processing capabilities.

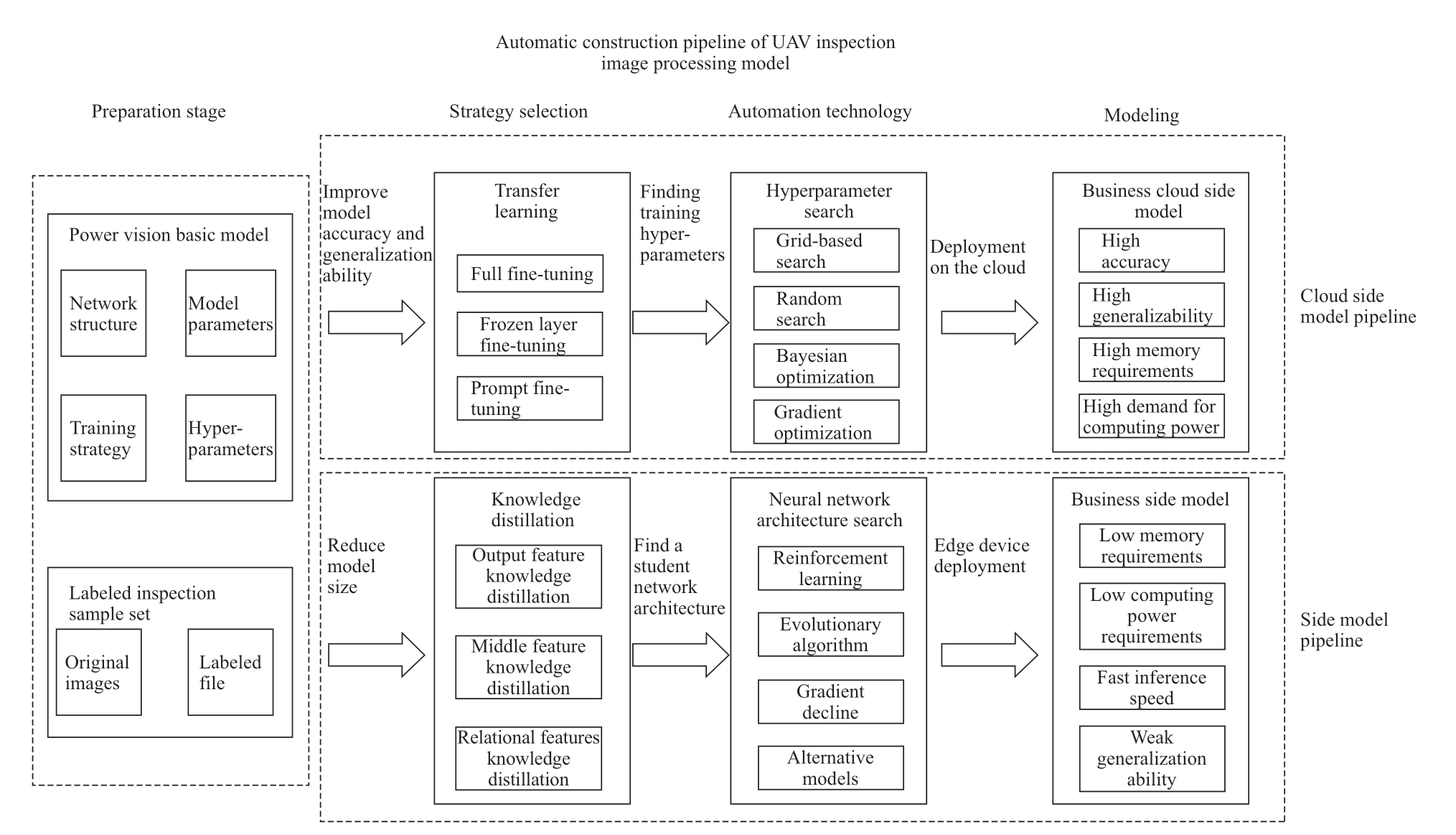

The impossible triangle theory of large-scale pre-trained models [88] states that three desirable attributes, namely a moderate size, strong generalization ability, and high accuracy in downstream tasks after adjustment, cannot all be achieved simultaneously in model application and deployment; at most two of them can be achieved at once. Therefore, two automated deep learning model pipelines for UAV inspection image processing models, shown in Fig. 5, are presented in this study. The first pipeline is designed for the automated construction of cloud deep learning models for UAV inspection image processing tasks that do not require real-time feedback, such as insulator breakage and metal fitting corrosion identification. This pipeline prioritizes model accuracy and generalization ability by fine-tuning on labeled samples, at the cost of giving up the fast inference and low memory and computational power demands required for edge deployment. Hyperparameter optimization replaces manual labor and expert experience by automating the determination of the training hyperparameters in transfer learning.

Fig.5 Automatic deep learning model pipelines for power line inspection image analysis and processing

For UAV transmission line inspection image processing tasks that require real-time analysis, such as bird nest and forest fire recognition, the deployed model is typically hosted on an edge server. Hence, a second pipeline is designed to construct an edge deep learning model. NAS is first employed to search for lightweight student model architectures. Knowledge distillation and labeled inspection samples are then used to transfer knowledge from the large-scale pre-trained electric power vision model (teacher network) to the student network, reducing the scale of the model while preserving downstream task accuracy in UAV transmission line inspection image processing as far as possible.

Both the cloud and edge models built automatically by the model layer are utilized in the application layer to fulfill the requirements of the UAV transmission line inspection image processes.In the first step,the cloud and edge models are uploaded to the model library of the electric power AI platform for unified management.Subsequently,either application programming interface services are provided to the cloud transmission line inspection platform by the model service publishing module or the cloud-edge management module is used to deploy the model by using a container on the edge server to support electric power inspection and security supervision applications.

7 Prototype system

To verify the feasibility of the automated deep learning system for the transmission line inspection image processing model,an automated deep learning prototype system was developed.The ResNet architecture was pretrained on ImageNet and utilized as the backbone for the cloud deep learning model pipeline.In the fine-tuning stage,frozen-layer fine-tuning was implemented for deep transfer learning and Bayesian optimization was used to automate hyperparameter optimization without expert intervention.

The automatic deep learning prototype system was integrated into the electric power artificial intelligence (AI) platform and implemented on a graphics processing unit (GPU) server cluster managed by Kubernetes with CentOS 7.6 as the operating system. The initial system requirements are a GPU server cluster with a minimum of 22 CPU cores, 32 GB of memory, two Nvidia V100 GPUs, and 500 GB of hard-disk space. In this study, bird nest recognition and insulator self-detonation recognition were used as the UAV image processing tasks to verify the effectiveness of the automatic deep learning module.

Table 5 Hyperparameter optimization results for the bird nest recognition and insulator self-detonation recognition tasks

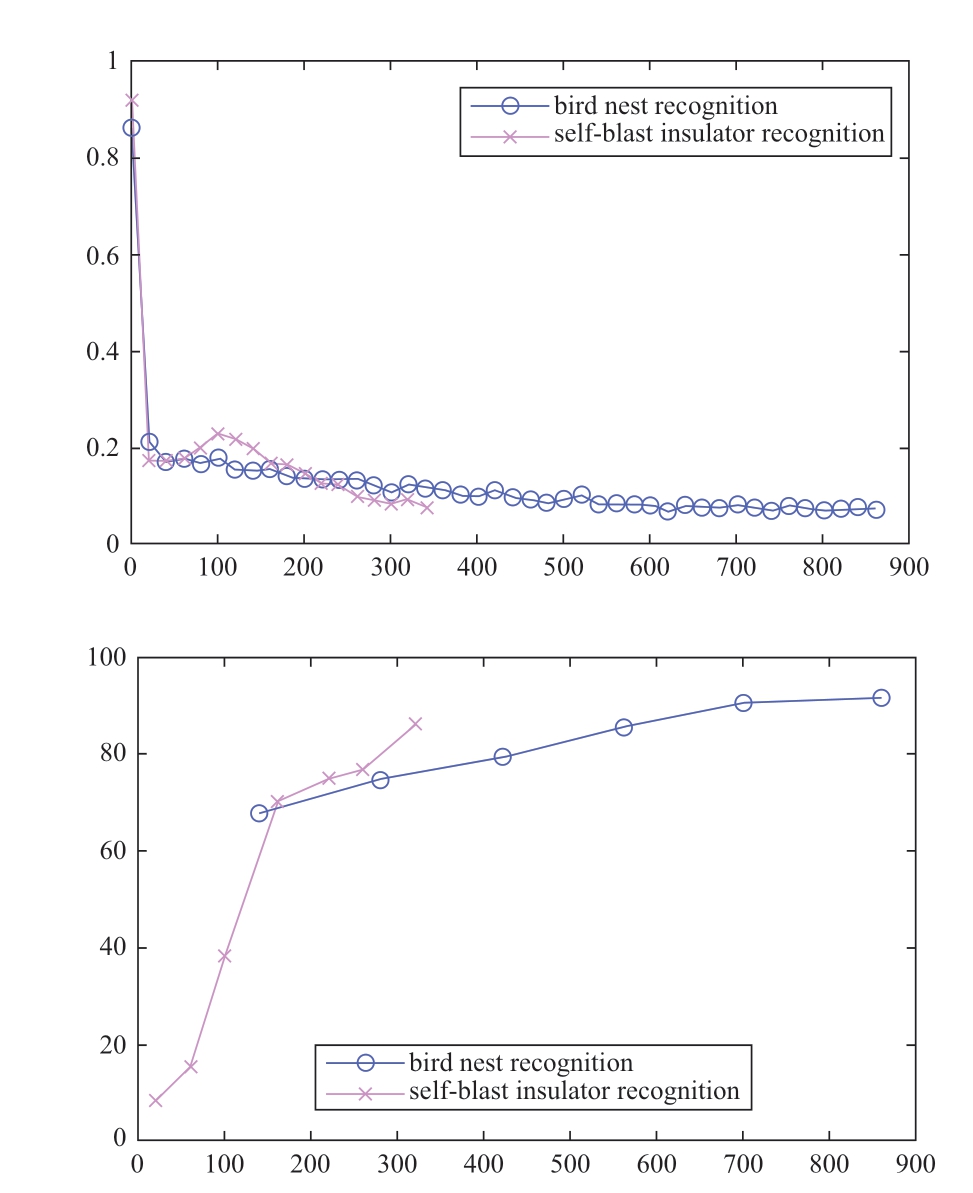

The model training progress is illustrated in Fig. 6. The upper graph depicts the change in the loss value of the bird nest recognition model and the insulator self-detonation model over the training iteration steps, and the lower graph shows the change in the mean average precision (mAP) of both models on the validation set over the training iteration steps. The loss of both models decreases as the number of training iteration steps increases, and the mAP on the validation set increases correspondingly. The final mAP on the validation set is 91.36% for the bird nest recognition model and 86.13% for the insulator self-detonation model, both trained by the automatic module.

Fig.6 Training progress of the deep learning models automatically constructed by the system

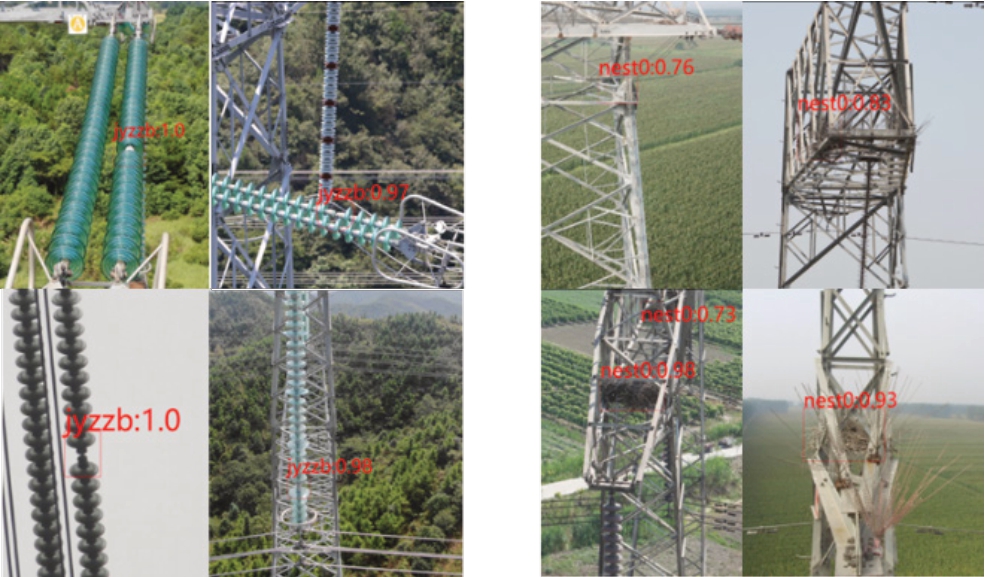

After training, the models are published to the electric power AI platform as services. The output results of the model services are depicted in Fig. 7, which illustrates the accurate detection of insulator self-detonation and bird nests.

Fig.7 Output of the deep learning models automatically constructed by the system

8 System application

The automated deep learning system proposed in this paper can be used both for the remote processing of UAV inspection images and for the local processing of UAV inspection images at the edge server. Remote processing of UAV inspection images includes insulator defect detection, power tower and auxiliary facility detection, and hardware defect detection. Although real-time processing speed is not critical here, high model accuracy and generalization performance are required because the models are applied and adjusted extensively and cover a wide area. Frequent model updates and adjustments are also necessary. In this scenario, the automated deep learning system for electric power UAV inspection image processing ensures high accuracy and generalization performance by constructing a large-scale pre-trained model for electric power vision tasks and using hyperparameter optimization methods to update and adjust the model automatically. The system thereby addresses the few-shot learning problem and effectively improves the efficiency, accuracy, and generalization performance of the model.

UAV inspection image edge processing includes power line recognition, online monitoring of transmission corridors, and multi-class power equipment recognition and classification. In these scenarios, real-time image processing is crucial. UAV inspection images can be uploaded to the edge device or server for local analysis and judgment so that the results are obtained in real time. In this context, the automated deep learning system for electric power UAV inspection image analysis transfers the image feature extraction ability of a large-scale pre-trained model as knowledge to the edge model (student model). Neural network architecture search is used to find a lightweight and efficient edge model architecture. This improves the data processing speed of the model and avoids the interference of complex backgrounds with model accuracy. The model can be deployed on edge devices.

9 Conclusion

The development of deep learning models for UAV transmission line inspection has been hindered by small scope of application,high R&D costs,and long R&D period.This paper presents an architecture and analyzes key technologies of an automated deep learning system for electric power UAV inspection image processing to address these issues.Initially,we outline the application of intelligent transmission line inspection by UAV and illustrate the application requirements of an automated deep learning system for transmission line UAV image processing.We then summarize the design difficulties involved and propose three design principles: generalization capability,extensibility,and automation.Next,this paper reviews the pre-training,fine-tuning,and automatic machine learning technologies closely related to these three design principles.Subsequently,we design the architecture of the automated deep learning system for UAV transmission line inspection image processing,build a prototype system,and verify the feasibility of the prototype system on two tasks: bird nest recognition and insulator self-detonation recognition.Finally,we discuss the application scenarios of the automatic deep learning system for transmission line UAV image processing.

Although the automated deep learning system proposed in this paper provides a path towards solving the problems of small scope of application, high R&D cost, and long R&D cycle in deep learning-based UAV transmission line inspection image processing, it requires annotated data for updating in the fine-tuning stage, and training transmission line inspection workers to annotate data takes a long time. The next developmental goal is to enable friendly, interactive communication between transmission line inspection workers and UAV transmission line inspection image processing models to facilitate efficient interaction.

Acknowledgements

This work was supported by the Science and Technology Project of the State Grid Corporation “Research on Key Technologies of Power Artificial Intelligence Open Platform” (5700-202155260A-0-0-00).

Declaration of Competing Interest

We declare that we have no conflict of interest.

References

[1] LeCun Y,Bengio Y,Hinton G (2015) Deep learning.Nature,521(7553): 436-444

[2] Liu Y,Li J,Xu W,et al.(2017) A method on recognizing transmission line structure based on multi-level perception.Image and Graphics: 9th International Conference,512-522

[3] Pan C,Cao X,Wu D (2016) Power line detection via background noise removal.2016 IEEE Global Conference on Signal and Information Processing

[4] Ling Z,Qiu RC,Jin Z,et al.(2018) An accurate and real-time self-blast glass insulator location method based on faster R-CNN and U-net with aerial images.CSEE Journal of Power and Energy Systems,5(04): 474-482

[5] Victoria A H,Maragatham G (2021) Automatic tuning of hyperparameters using Bayesian optimization.Evolving Systems,12(1): 217-223

[6] Huisman M,van Rijn J N,Plaat A (2021) A survey of deep meta-learning.Artificial Intelligence Review,54(6): 4483-454

[7] Doke A,Gaikwad M (2021) Survey on automated machine learning (AutoML) and meta learning.International Conference on Computing Communication and Networking Technologies(ICCCNT)

[8] Huo Y,Prasad G,Lampe L,et al.(2019) Smart-grid monitoring:enhanced machine learning for cable diagnostics.IEEE International Symposium on Power Line Communications and its Applications (ISPLC)

[9] Syed D,Refaat S S,Abu-Rub H,et al.(2019) Averaging ensembles model for forecasting of short-term load in smart grids.IEEE International Conference on Big Data (Big Data)

[10] Mendes H A (2021) On AutoMLs for short-term solar radiation forecasting in Brazilian northeast.International Conference on Engineering and Emerging Technologies (ICEET)

[11] He X,Zhao K,Chu X (2021) AutoML: a survey of the state-of-the-art.Knowledge-Based Systems,212,106622

[12] Jena B,Nayak G K,Saxena S (2022) Convolutional neural network and its pretrained models for image classification and object detection: a survey.Concurrency and Computation:Practice and Experience,34(6),e6767

[13] Zhang C,Zhang M,Zhang S,et al.(2021) Delving deep into the generalization of vision transformers under distribution shifts.Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition: 7277-7286

[14] Huang X,Bi N,Tan J (2022) Visual transformer-based models:A survey.International Conference on Pattern Recognition and Artificial Intelligence.Cham: Springer International Publishing,2022

[15] Jing L,Tian Y (2021) Self-supervised visual feature learning with deep neural networks: A survey.IEEE Transactions on Pattern Analysis and Machine Intelligence,43(11): 4037-4058

[16] Liu Q,Jiang Y (2022) Dive into big model training.arXiv:2207.11912

[17] Liu CY,Wu YQ (2022) Research progress of transmission line visual inspection method based on deep learning.Chinese Journal of Electrical Engineering,1-24

[18] Chatfield K,Simonyan K,Vedaldi A,et al.(2014) Return of the devil in the details: delving deep into convolutional nets.Proceedings British Machine Vision Conference

[19] He K,Zhang X,Ren S,et al.(2016) Deep residual learning for image recognition.2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

[20] Tan M,Le Q (2019) EfficientNet: Rethinking model scaling for convolutional neural networks.International Conference on Machine Learning

[21] Vaswani A,Shazeer N,Parmar N,et al.(2017) Attention is all you need.Advances in neural information processing systems,30

[22] Wu X,Xia Y,Zhu J,et al.(2022) A study of BERT for context-aware neural machine translation.Machine Learning,111(3):917-935

[23] Dosovitskiy A,Beyer L,Kolesnikov A,et al.(2021) An image is worth 16x16 words: Transformers for image recognition at scale.International Conference on Learning Representations

[24] Russakovsky O,Deng J,Su H,et al.(2015) ImageNet large scale visual recognition challenge.International Journal of Computer Vision,115: 211-252

[25] Yuan L,Chen Y,Wang T,et al.(2021) Tokens-to-token ViT:Training vision transformers from scratch on ImageNet.2021 IEEE/CVF International Conference on Computer Vision (ICCV)

[26] Liu Z,Lin Y,Cao Y,et al.(2021) Swin transformer:Hierarchical vision transformer using shifted windows.2021 IEEE/CVF International Conference on Computer Vision (ICCV)

[27] Guo J,Han K,Wu H,et al.(2022) CMT: Convolutional neural networks meet vision transformers.2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

[28] Yuan L,Hou Q,Jiang Z,et al.(2023) VOLO: Vision outlooker for visual recognition.IEEE Transactions on Pattern Analysis and Machine Intelligence,45(5): 6575-6586

[29] Bengio Y,LeCun Y,Hinton G (2021) Deep learning for AI.Commun.ACM,64(7): 58-65

[30] He K,Chen X,Xie S,et al.(2021) Masked autoencoders are scalable vision learners.Proceedings of the IEEE/CVF conference on computer vision and pattern recognition

[31] Liu X,Zhang F,Hou Z,et al.(2020) Self-supervised learning:Generative or contrastive.IEEE Transactions on Knowledge and Data Engineering,35(1): 857-876

[32] Van Den Oord A,Kalchbrenner N,Kavukcuoglu K (2016) Pixel recurrent neural networks.Proceedings of The 33rd International Conference on Machine Learning,Proceedings of Machine Learning Research

[33] Van Den Oord A,Dieleman S,Zen H,et al.(2016) WaveNet: A generative model for raw audio.arXiv: 1609.03499.Retrieved September 01,2016

[34] Zhao T,Lee K,Eskenazi M (2018) Unsupervised discrete sentence representation learning for interpretable neural dialog generation.Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)

[35] Liu Y,Ma J,Xie Y,et al.(2022) Contrastive predictive coding with transformer for video representation learning.Neurocomputing,482,154-162

[36] He K,Fan H,Wu Y (2020) Momentum contrast for unsupervised visual representation learning.2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

[37] Chen T,Kornblith S,Norouzi M,et al.(2020) A simple framework for contrastive learning of visual representations.arXiv: 2002.05709.Retrieved February 01,202

[38] Zhang G,Tu E,Cui D (2017) Stable and improved generative adversarial nets (GANs): A constructive survey.2017 IEEE International Conference on Image Processing (ICIP)

[39] Gulrajani I,Ahmed F,Arjovsky M,et al.(2017) Improved training of Wasserstein GANs.arXiv: 1704.00028.Retrieved March 01,2017

[40] Ren S,He K,Girshick R,et al.(2017) Faster R-CNN: Towards real-time object detection with region proposal networks.IEEE Transactions on Pattern Analysis and Machine Intelligence,39(6): 1137-1149

[41] Carion N,Massa F,Synnaeve G,et al.(2020) End-to-end object detection with transformers.In A.Vedaldi,H.Bischof,T.Brox,J.-M.Frahm,Computer Vision - ECCV 2020,Cham

[42] Chen M,Wang YZ,Dai Y,et al.(2022) SaSnet: Real-time powerline segmentation network based on self-supervised learning.Chinese Journal of Electrical Engineering,42(04):1365-1375

[43] Wang DL,Sun J,Zhang TY,et al.(2022) Improved generative adversarial network based self-exploding defect detection method for glass insulators.High Voltage Technology,48(03): 1096-1103

[44] Ramesh A,Pavlov M,Goh G,et al.(2021) Zero-shot text-to-image generation.Proceedings of the 38th International Conference on Machine Learning

[45] Li M,Andersen D G,Smola A J,et al.(2014) Communication efficient distributed machine learning with the parameter server.Advances in Neural Information Processing Systems,27

[46] Yu M,Tian Y,Ji B,et al.(2022) GADGET: Online resource optimization for scheduling Ring-All-Reduce learning jobs.IEEE INFOCOM 2022 - IEEE Conference on Computer Communications

[47] Li S,Zhao Y,Varma R,et al.(2020) PyTorch distributed:Experiences on accelerating data parallel training.Proc.VLDB Endow.,13(12),3005-3018

[48] Huang Y,Cheng Y,Bapna A,et al.(2018) GPipe: Efficient training of giant neural networks using pipeline parallelism.arXiv: 1811.06965.Retrieved November 01,2018

[49] Bian Z,Liu H,Wang B,et al.(2021) Colossal-AI: A unified deep learning system for large-scale parallel training.arXiv:2110.14883.Retrieved October 01,2021

[50] Zhuang F,Qi Z,Duan K,et al.(2021) A comprehensive survey on transfer learning.Proceedings of the IEEE,109(1),43-76

[51] Ma P,Fan YF (2020) Small sample intelligent substation power equipment component detection based on deep migration learning.Electric Power Grid Technology,44(03): 1148-1159

[52] Yi Y,Dong CF,Hao RY,et al.(2020) Deep transfer learning-based phase selection model for transmission line faults and its mobility.Electric Power Automation Equipment,40(10): 165-172

[53] Zi QZ,Yang J,Ye S,et al.(2019) Rust recognition algorithm of cable tunnel based on transfer learning convolutional neural network.China Electric Power,52(04): 104-110

[54] Yosinski J,Clune J,Bengio Y,et al.(2014) How transferable are features in deep neural networks? Advances in Neural Information Processing Systems (NIPS)

[55] Jia M,Tang L,Chen BC,et al.(2022) Visual prompt tuning.In S.Avidan,G.Brostow,M.Cissé,G.M.Farinella,T.Hassner,Computer Vision - ECCV 2022 European Conference on Computer Vision,Cham

[56] Chen LC,Zhu Y,Papandreou G,et al.(2018) Encoder-decoder with atrous separable convolution for semantic image segmentation.In V.Ferrari,M.Hebert,C.Sminchisescu,Y.Weiss,Computer Vision - ECCV 2018 European Conference on Computer Vision,Cham

[57] Samplawski C,Marlin B M (2021) Towards transformer-based real-time object detection at the edge: A benchmarking study.MILCOM 2021 - 2021 IEEE Military Communications Conference (MILCOM)

[58] Han K,Xiao A,Wu E,et al.(2021) Transformer in transformer.Advances in Neural Information Processing Systems (NIPS),34,15908-15919

[59] Wang W,Xie E,Li X,et al.(2021) Pyramid vision transformer:A versatile backbone for dense prediction without convolutions.2021 IEEE/CVF International Conference on Computer Vision(ICCV)

[60] Fang Y,Liao B,Wang X,et al.(2021) You only look at one sequence: Rethinking transformer in vision through object detection.Advances in Neural Information Processing Systems(NIPS)

[61] Zhang R,Isola P,Efros A A (2016) Colorful image colorization.Computer Vision - ECCV 2016 European Conference on Computer Vision,Cham

[62] Noroozi M,Favaro P (2016) Unsupervised learning of visual representations by solving jigsaw puzzles.In B.Leibe,J.Matas,N.Sebe,M.Welling,Computer Vision - ECCV 2016 European Conference on Computer Vision,Cham

[63] Houlsby N,Giurgiu A,Jastrzebski S,et al.(2019) Parameter-efficient transfer learning for NLP.Proceedings of the 36th International Conference on Machine Learning,Proceedings of Machine Learning Research

[64] Zheng S,Lu J,Zhao H,et al.(2021) Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers.IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

[65] Hinton G,Vinyals O,Dean J (2015) Distilling the knowledge in a neural network.arXiv: 1503.02531.Retrieved March 01,2015

[66] Kim S,Kim H E (2017) Transferring knowledge to smaller network with class-distance loss.ICLR (Workshop)

[67] Bengio Y,Courville A,Vincent P (2013) Representation learning: A review and new perspectives.IEEE Transactions on Pattern Analysis and Machine Intelligence,35(8): 1798-1828

[68] Passban P,Wu Y,Rezagholizadeh M,et al.(2021) ALP-KD:Attention-based layer projection for knowledge distillation.Proceedings of the AAAI Conference on Artificial Intelligence

[69] Yim J,Joo D,Bae J,et al.(2017) A gift from knowledge distillation: Fast optimization,network minimization and transfer learning.2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

[70] Liu Y,Cao J,Li B,et al.(2019) Knowledge distillation via instance relationship graph.IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

[71] Zhen BZ,Xiong JC,City Q,et al.(2021) Defect image classification of transmission line bolts based on dynamic supervised knowledge distillation.High Voltage Technology,47(02): 406-414

[72] City Q,Xiong JC,Zhen BZ,et al.(2021) Optimal knowledge transfer wide residual network bolt defect image classification of transmission lines.Chinese Journal of Image and Graphics,26(11): 2571-2581

[73] Hesterman J Y,Caucci L,Kupinski M A,et al.(2010) Maximum-likelihood estimation with a contracting-grid search algorithm.IEEE Transactions on Nuclear Science,57(3): 1077-1084

[74] Bergstra J,Bengio Y (2012) Random search for hyper-parameter optimization.J.Mach.Learn.Res.,13(1): 281-305

[75] Shahriari B,Swersky K,Wang Z,et al.(2016) Taking the human out of the loop: A review of Bayesian optimization.Proceedings of the IEEE,104(1): 148-175

[76] Maclaurin D,Duvenaud D,Adams R (2015) Gradient-based hyperparameter optimization through reversible learning.Proceedings of the 32nd International Conference on Machine Learning,Proceedings of Machine Learning Research

[77] Lorenzo P R,Nalepa J,Kawulok M,et al.(2017) Particle swarm optimization for hyper-parameter selection in deep neural networks.Proceedings of the Genetic and Evolutionary Computation Conference,Berlin,Germany

[78] Hutter F,Hoos H H,Leyton-Brown K (2011) Sequential model-based optimization for general algorithm configuration.In C.A.C.Coello,Learning and Intelligent Optimization International Conference on Learning and Intelligent Optimization,Berlin,Heidelberg

[79] Liu Z,Pavao A,Xu Z,et al.(2021) Winning solutions and post-challenge analyses of the ChaLearn AutoDL Challenge 2019.IEEE Transactions on Pattern Analysis and Machine Intelligence,43(9): 3108-3125

[80] Falkner S,Klein A,Hutter F (2018) BOHB: Robust and efficient hyperparameter optimization at scale.Proceedings of the International Conference on Machine Learning

[81] Liu Y,Jia X,Tan M,et al.(2020) Search to distill: Pearls are everywhere but not the eyes.IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

[82] Zoph B,Le Q V (2017) Neural architecture search with reinforcement learning.International Conference on Learning Representations; Irwan B,Zoph B,Vijay V,et al.(2017) Neural optimizer search with reinforcement learning.Proceedings of the 34th International Conference on Machine Learning,70: 459-468

[83] Elsken T,Metzen J H,Hutter F (2019) Efficient multi-objective neural architecture search via Lamarckian Evolution.International Conference on Learning Representations

[84] Shin R,Packer C,Song D (2018) Differentiable neural network architecture search.International Conference on Learning Representations (ICLR 2018 Workshop)

[85] Domhan T,Springenberg J T,Hutter F (2015) Speeding up automatic hyperparameter optimization of deep neural networks by extrapolation of learning curves.International Joint Conferences on Artificial Intelligence

[86] Li C,Peng J,Yuan L,Wang G,et al.(2019) Blockwisely supervised neural architecture search with knowledge distillation.arXiv: 1911.13053

[87] Peng H,Du H,Yu H,et al.(2020) Cream of the crop: Distilling prioritized paths for one-shot neural architecture search.Advances in Neural Information Processing Systems (NIPS),33: 17955-17964

[88] Zhu C,Zeng M (2022) Impossible triangle: What’s next for pretrained language models? CoRR,abs/2204.06130

Received: 3 February 2023/ Accepted: 13 July 2023/ Published: 25 October 2023

✉Daoxing Li

lidaoxing@epri.sgcc.com.cn

Xiaohui Wang

wangxiaohui@epri.sgcc.com.cn

Jie Zhang

18161273371@163.com

Zhixiang Ji

jizhixiang@epri.sgcc.com.cn