0 Introduction

Statistics indicate that more than half of electric power accidents are caused by unsafe behaviors of workers. To reduce the incidence of power accidents and the resulting casualties, many experts have carried out extensive research and standardized the safety behavior of workers in detail. However, because the operation manual specifies numerous instructions, staff are often unable to follow all of them. In particular, accidents caused by illegal operations may still occur under conditions such as fatigue and sudden weather changes. In electric power operation inspection, onsite supervision by personnel is usually adopted; however, this traditional manual method cannot effectively reduce the accident rate. Therefore, it is important to use computer vision algorithms based on artificial intelligence to quickly and accurately identify the risk behavior of personnel and thereby reduce the accident rate and casualties in electric power operations. From a technical viewpoint, these safety risk behaviors can be divided into two categories: in the first, part or all of a worker's limbs enter a dangerous area; in the second, the operation steps violate the operating procedures. This paper proposes an algorithm that accurately identifies the keypoints of each part of the human body and raises an alarm when the body approaches a dangerous area, which directly solves the identification problem for the first category of safety risk behavior. The second category can be addressed by feeding the keypoints into a behavior recognition algorithm.

In terms of safety risk behavior detection for power maintenance personnel, most existing applications perform personnel-crossing detection and human posture detection on single frames [1-5]. In the single-frame processing mode, human posture detection has achieved good results in laboratory environments [6-10]. Even when an image sequence is available, most applications simply convert the video stream into single-frame images and process them with a single-frame algorithm. Image sequence processing retains the advantages of single-frame processing while also exploiting the information shared between frames. Many applications combine image sequence processing with other techniques, and these combinations have been widely investigated. Combining image sequence processing with target extraction enables the tracking of moving objects: each independently moving target is marked in the initial frame of the sequence, or in the frame in which it first appears, and is then located in the subsequent frames. Moving object tracking not only provides the motion trajectory of the monitored object but also supplies reliable data for 2D and 3D scene reconstruction and for motion analysis of the moving object. Combining image sequence processing with super-resolution reconstruction gives rise to image sequence super-resolution restoration, which uses not only prior information about the objects and the information in a single image but also the correlation between different images, so that the images complement one another. It therefore achieves better super-resolution restoration than a single image. Consequently, image sequence processing is widely used in fields such as optical remote sensing, video and multimedia, medical imaging, astronomical imaging, and intelligent transportation, where it has remarkable application prospects and market potential. Intelligent recognition based on image sequences has become an important application of artificial intelligence technology.

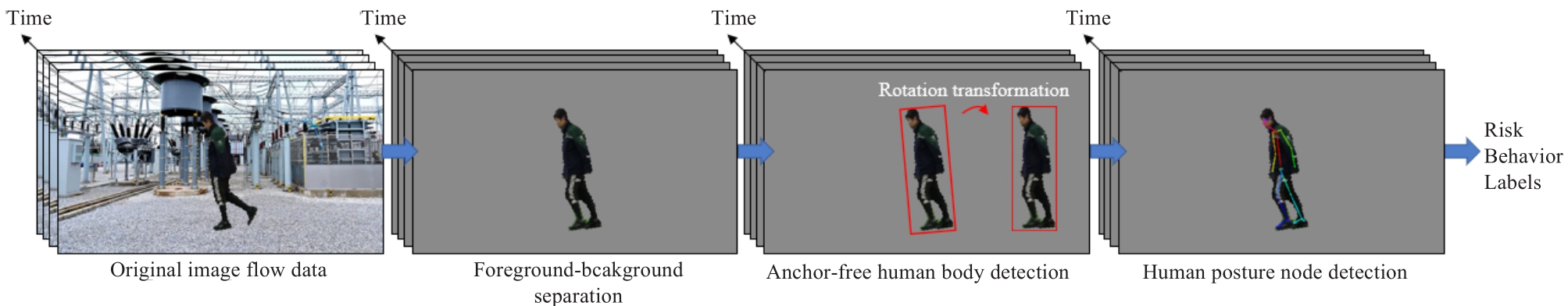

However, in most applications the interframe information in image sequences is not sufficiently exploited. Most applications simply convert the video stream into single-frame images and process them frame by frame. They use only the sequence information between multiple frames and discard the rest, which considerably reduces the value of the image sequence, particularly for detecting the safety risk behavior of power operation inspectors, where single-frame processing does not satisfy the accuracy requirements. In addition, insufficient use of interframe information makes it difficult to recognize the image background accurately. Complex backgrounds often interfere significantly with target recognition algorithms and reduce recognition accuracy, and improvements in single-frame recognition accuracy have slowed significantly. Previous algorithms based on image streams use only the sequence information between multiple frames and do not exploit the invariance of the background across the frames of the stream to separate the foreground from the background. This separation is crucial for human pose detection. Therefore, this paper proposes a foreground–background separation method based on image sequences, as shown in Fig.1. Making full use of interframe information to improve target recognition accuracy has significant application value for detecting the safety risk behavior of power operation inspectors.

Fig.1 Diagram of image sequence-based risk behavior detection of power maintenance personnel

1 Foreground–background separation of monitoring image sequence for power maintenance personnel

Traditional foreground–background separation methods distinguish the foreground from the background according to pixel gray values and connectivity against a uniform-color background [11-15]. Such methods have achieved good results in laboratory environments [16-19]. However, in a power operation inspection scene, the background usually consists of complex power equipment. In extreme cases, the background may also contain other elements such as people, vehicles, machinery, and natural landscapes. Therefore, in such a scene the background cannot simply be separated from the pixels of a single-frame image, and the characteristics of a fixed background and a dynamic foreground should be fully exploited when removing the background.

The image sequences are collected from the monitoring video of power operation and inspection personnel in the same operation and maintenance scene, divided into N images, arranged in chronological order, and defined as the image sequence $\{X_i \in \mathbb{R}^{n_1 \times n_2}\}_{i=1}^{N}$. Each element $X_i$ is pulled into a column vector, and all vectors are arranged in chronological order to obtain the matrix

$$D = [\mathrm{unfold}(X_1), \mathrm{unfold}(X_2), \ldots, \mathrm{unfold}(X_N)] \in \mathbb{R}^{n_1 n_2 \times N}.$$

Because the background information in the image sequence $\{X_i\}_{i=1}^{N}$ is fixed, the matrix $D$ can be considered as the superposition of a background information matrix $B$ and a foreground information matrix $F$, where the background matrix satisfies $\mathrm{rank}(B) \le c$ and the foreground matrix satisfies $\|F\|_1 \le k$. Here, $\|\cdot\|_1$ denotes the sum of the absolute values of the matrix entries.
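As a rough illustration of how $D$ is assembled, the following NumPy sketch stacks flattened frames as columns and checks that a perfectly static scene yields a rank-1 matrix, which is why a low-rank constraint on $B$ is reasonable. The row-major flattening order and the function names are assumptions made for illustration, not part of the original method.

```python
import numpy as np


def build_observation_matrix(frames):
    """Stack N frames X_i (each n1 x n2) as the columns of D (n1*n2 x N)."""
    n1, n2 = frames[0].shape
    for X in frames:
        if X.shape != (n1, n2):
            raise ValueError("all frames must have the same size")
    # unfold(X_i): flatten each frame into a column vector (row-major order assumed).
    columns = [np.asarray(X, dtype=float).reshape(n1 * n2) for X in frames]
    return np.stack(columns, axis=1)


def fold_column(column, n1, n2):
    """Inverse of unfold: reshape one column of D back into an n1 x n2 image."""
    return np.asarray(column).reshape(n1, n2)


# Usage: five 4 x 3 frames of a perfectly static scene.
rng = np.random.default_rng(0)
scene = rng.uniform(0, 255, size=(4, 3))
D = build_observation_matrix([scene.copy() for _ in range(5)])
print(D.shape)                   # (12, 5)
print(np.linalg.matrix_rank(D))  # identical columns span a single direction
```

In real footage the foreground (moving personnel) perturbs a few entries of each column, so $D$ is only approximately low-rank; the separation step recovers that low-rank part.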

Therefore, separating the foreground information (personnel) from the background information (scene) in the monitoring video of power maintenance personnel can be expressed as the following optimization problem:

$$\min_{B,\,F}\ \|B\|_* + \lambda \|F\|_1 \quad \text{s.t.} \quad D = B + F$$

where $\|\cdot\|_*$ denotes the nuclear norm of a matrix (i.e., the sum of its singular values) and $\lambda$ is a positive weighting parameter. The nuclear norm and the $\ell_1$ norm act as convex surrogates for the rank of $B$ and the sparsity of $F$, respectively. In other words, the equation seeks the background $B$ and foreground $F$ that minimize this objective subject to the constraint that their sum equals the original matrix $D$.

The robust principal component analysis (RPCA)problem can be solved by the exact augmented Lagrange multiplier (ALM) algorithm proposed by Lin, Chen, and Ma[20].The background information matrix B and foreground information matrix F are solved, and the column vector in the foreground information matrix F is restored to the foreground information image sequence{ F ∈ R n1 ×n2 }N.The

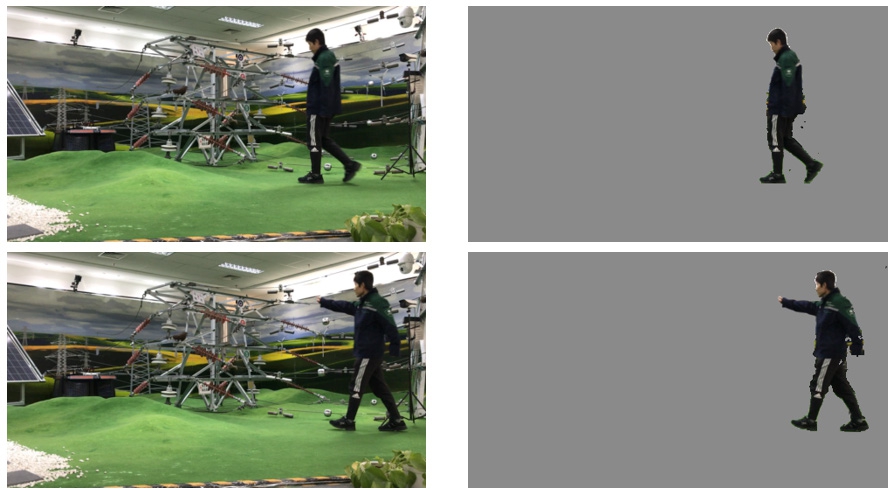

i i =1 video of the operation site is processed to obtain images before and after separation, as shown in Fig.2.

Fig.2 Diagram of foreground-background separation

The proposed background removal algorithm makes full use of multi-frame information and thus removes the background effectively; the foreground images obtained after background removal are then processed frame by frame.
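For readers who want to reproduce the separation step, the sketch below implements the widely used inexact variant of the ALM method of Lin, Chen, and Ma [20] in NumPy. Note that the paper reports using the exact ALM algorithm; the inexact variant is swapped in here only because it is shorter and solves the same optimization problem. The default $\lambda = 1/\sqrt{\max(m, n)}$, the step-size constants, and the synthetic data are assumptions; a value such as the $\lambda$ reported in the experiments would have to be passed explicitly, and a suitable value depends on image size and pixel scaling.

```python
import numpy as np


def shrink(X, tau):
    """Elementwise soft-thresholding: proximal operator of tau * ||X||_1."""
    return np.sign(X) * np.maximum(np.abs(X) - tau, 0.0)


def rpca_inexact_alm(D, lam=None, tol=1e-7, max_iter=500, rho=1.5):
    """Split D into low-rank B and sparse F with D = B + F (inexact ALM sketch)."""
    D = np.asarray(D, dtype=float)
    m, n = D.shape
    if lam is None:
        lam = 1.0 / np.sqrt(max(m, n))  # common default in the RPCA literature
    norm_two = np.linalg.norm(D, 2)  # largest singular value
    # Dual variable scaled so that its dual norm equals 1.
    Y = D / max(norm_two, np.abs(D).max() / lam)
    mu, mu_max = 1.25 / norm_two, 1.25e7 / norm_two
    d_norm = np.linalg.norm(D, "fro")
    B = np.zeros_like(D)
    F = np.zeros_like(D)
    for _ in range(max_iter):
        # Background step: singular value thresholding (prox of the nuclear norm).
        U, s, Vt = np.linalg.svd(D - F + Y / mu, full_matrices=False)
        B = (U * np.maximum(s - 1.0 / mu, 0.0)) @ Vt
        # Foreground step: soft-thresholding (prox of lam * l1 norm).
        F = shrink(D - B + Y / mu, lam / mu)
        # Dual ascent on the constraint D = B + F, then increase the penalty.
        residual = D - B - F
        Y = Y + mu * residual
        mu = min(mu * rho, mu_max)
        if np.linalg.norm(residual, "fro") / d_norm < tol:
            break
    return B, F


# Synthetic check: static (rank-1) background plus 3% sparse "foreground" pixels.
rng = np.random.default_rng(1)
background = rng.uniform(0, 1, size=(200, 1)) @ np.ones((1, 30))
foreground = np.zeros((200, 30))
mask = rng.random((200, 30)) < 0.03
foreground[mask] = rng.uniform(-5, 5, size=mask.sum())
B, F = rpca_inexact_alm(background + foreground)
print(np.linalg.norm(B - background) / np.linalg.norm(background))
```

Each column of the returned `F` can then be reshaped back to an $n_1 \times n_2$ image to obtain the foreground sequence $\{F_i\}$.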

2 Realization of risk behavior detection of power maintenance personnel

Traditional human posture recognition algorithms are sensitive to the angular deviation between the camera layout and the work-site image. To address this problem, we propose a human body posture recognition method for power operation inspectors based on an anchor-free frame, which uses the separated foreground information image sequence $\{F_i\}_{i=1}^{N}$ to detect the safety risk behavior of power maintenance personnel.

2.1 Anchor-free human body detection

To analyze the foreground information image sequence, we design a directed anchor-free detection frame $G_1: F_i \to P_i$, where $P_i$ is the directed detection result for the power maintenance personnel. The directed anchor-free detection frame $G_1$ consists of a feature extractor $G_{1,1}$ and a multi-task detection head $G_{1,2}$.

Feature extractor $G_{1,1}$ adopts a parallel structure. First, the input image is passed through a convolution layer with a 7 × 7 kernel to reduce the size of the feature map. Next, the feature map is passed through another convolution layer with a 3 × 3 kernel to extract coarse features. The feature map then goes through two branches: a keypoint feature extraction branch and a prediction feature extraction branch. The first branch adopts an atrous convolution network, and the second uses ResNet. Finally, the feature extractor merges the feature maps of the two branches. The result of multiscale prediction is $P_i \in \mathbb{R}^{l_i \times 5 \times 3}$.

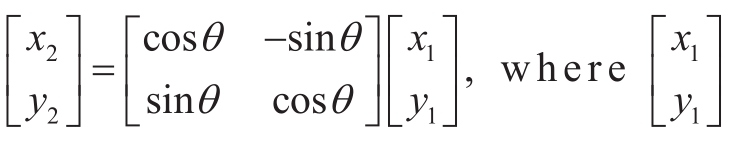

We express the detection result as a direction-adaptive anchor box. We denote one power operation inspection person in a single frame as $P_{ij} = \{x_{ij}, y_{ij}, w_{ij}, h_{ij}, \theta_{ij}\}$, where $x_{ij}$ and $y_{ij}$ are the x- and y-coordinates of the center point of the detection box, $w_{ij}$ and $h_{ij}$ are the width and height of the detection box, and $\theta_{ij}$ is the clockwise rotation angle of the detection box around its center. A detection result is shown in Fig.3.

Fig.3 Diagram of anchor-free human body detection
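To make the box parameterization concrete, this small sketch computes the four corners of a directed box $\{x, y, w, h, \theta\}$. It assumes image coordinates (x to the right, y downward) and an angle in degrees; under that convention the standard rotation matrix appears clockwise on screen. The function name is hypothetical.

```python
import math


def oriented_box_corners(x, y, w, h, theta_deg):
    """Corners of a box with centre (x, y), width w, height h, rotated
    clockwise (as seen on screen) by theta_deg around its centre.

    Image coordinates are assumed: x grows rightwards, y grows downwards.
    Corners are returned starting from the top-left corner of the unrotated box.
    """
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(x + dx * c - dy * s, y + dx * s + dy * c) for dx, dy in offsets]


print(oriented_box_corners(10, 20, 4, 2, 0))   # axis-aligned box
print(oriented_box_corners(10, 20, 4, 2, 90))  # same box turned a quarter clockwise
```

With $\theta = 0$ the corners reduce to the ordinary axis-aligned rectangle; the angle only rotates the corner offsets about the center.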

2.2 Rotation transformation

Using the values of the anchor box $P_{ij}$, we further apply the rotation transformation $G_2$ to the detected person and thus obtain the corrected (upright) person detection image $Q_{ij}$. There are many ways to perform rotation transformations [21-24], and we used the most efficient one among them. The rotation transformation maps each coordinate after the rotation transformation to the corresponding coordinate before the rotation transformation. Because the coordinates before the rotation transformation are generally not integers, they cannot be used directly to index pixel values in the image. To solve this problem, we round each of them up or down, which yields four neighboring integer pixel coordinates and, correspondingly, four pixel values. Because interpolation produces a high-quality image, we use the bilinear interpolation of these four pixel values as the pixel value at the transformed coordinate. After the video stream passes successively through the directed anchor-free detection frame $G_1$ and the rotation transformation $G_2$, we obtain the corrected image of each person.
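The correction step $G_2$ can be sketched as inverse mapping plus bilinear interpolation: for every pixel of the upright crop, compute the corresponding real-valued coordinate in the original frame, then blend its four integer neighbors. The explicit mapping below (rotation about the box center in image coordinates with y pointing down), the border clamping, and the grayscale input are assumptions, because the paper's own rotation formula is not reproduced in the text.

```python
import numpy as np


def bilinear_sample(img, x, y):
    """Sample a grayscale image at real-valued coordinates (x, y).

    Each coordinate is rounded down and up to obtain four neighbouring pixels,
    whose values are blended by bilinear interpolation. Neighbours outside the
    image are clamped to the border (an assumption).
    """
    h, w = img.shape
    xf, yf = np.floor(x), np.floor(y)
    ax, ay = x - xf, y - yf  # fractional offsets in [0, 1)
    x0 = np.clip(xf.astype(int), 0, w - 1)
    x1 = np.clip(xf.astype(int) + 1, 0, w - 1)
    y0 = np.clip(yf.astype(int), 0, h - 1)
    y1 = np.clip(yf.astype(int) + 1, 0, h - 1)
    top = (1 - ax) * img[y0, x0] + ax * img[y0, x1]
    bottom = (1 - ax) * img[y1, x0] + ax * img[y1, x1]
    return (1 - ay) * top + ay * bottom


def rectify_person(img, x, y, w, h, theta_deg):
    """Return an upright h x w crop of the box (x, y, w, h, theta) in img.

    theta_deg is the clockwise box angle (image coordinates, y pointing down).
    """
    t = np.radians(theta_deg)
    c, s = np.cos(t), np.sin(t)
    v, u = np.mgrid[0:h, 0:w].astype(float)  # output rows v, columns u
    du, dv = u - (w - 1) / 2.0, v - (h - 1) / 2.0
    # Inverse mapping: rotate the output offsets by theta about the box centre.
    src_x = x + du * c - dv * s
    src_y = y + du * s + dv * c
    return bilinear_sample(np.asarray(img, dtype=float), src_x, src_y)


img = np.arange(100, dtype=float).reshape(10, 10)
print(rectify_person(img, 4.5, 4.5, 4, 2, 0))  # matches img[4:6, 3:7]
```

Inverse mapping (output to input) is used rather than pushing input pixels forward, so every output pixel receives exactly one interpolated value and no holes appear.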

2.3 Human posture node detection

There are several methods for human gesture node detection [25]. In this study, we used OpenPose [26] to detect human gesture nodes, and we denote the OpenPose detector as $G_3$. For the corrected human image $Q_{ij}$ (Fig.3), human gesture node detection performs the transformation $G_3: Q_{ij} \to S_{ij}$. Detector $G_3$ consists of a feature extractor $G_{3,1}$ and a key node correlation predictor $G_{3,2}$. $G_{3,1}$ is composed of convolution and pooling layers and adopts the following structure:

where $C_{3\times3}$ represents a 3 × 3 convolution and $P_{2\times2}$ represents 2 × 2 max pooling. The key node correlation predictor $G_{3,2}$ consists of six parts in series. The input of the first part is the output of the feature extractor $G_{3,1}$; for each remaining part, the input is the output of the previous part combined with the output of $G_{3,1}$. By building the network structure in (2), each branch can effectively utilize the local features of the images. In $G_{3,2}$, each part consists of two branches with the same structure: one branch predicts the node information, and the other predicts the correlation information among the nodes. The first part is constructed as follows:

and the structure of the remaining parts is as follows:

Finally, the network outputs the human gesture key node information $S_{ij}$.
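The series wiring of $G_{3,2}$ described above (a first part fed by the $G_{3,1}$ features, later parts fed by the previous part's outputs combined with those features, two branches per part) can be sketched at the level of tensor shapes. Each branch is replaced here by a randomly initialized 1 × 1 channel-mixing layer purely to keep the example short; real OpenPose branches are deeper convolutional stacks, and the channel counts below are hypothetical.

```python
import numpy as np


def make_branch(in_ch, out_ch, rng):
    """Hypothetical stand-in for one branch: a random 1 x 1 convolution."""
    weights = rng.standard_normal((out_ch, in_ch)) * 0.1
    return lambda x: np.einsum("oc,chw->ohw", weights, x)


def build_predictor(feat_ch, n_nodes, n_links, n_parts=6, seed=0):
    """Create n_parts two-branch parts; part t > 0 also sees the previous outputs."""
    rng = np.random.default_rng(seed)
    parts = []
    for t in range(n_parts):
        in_ch = feat_ch if t == 0 else feat_ch + n_nodes + n_links
        parts.append((make_branch(in_ch, n_nodes, rng), make_branch(in_ch, n_links, rng)))
    return parts


def run_predictor(parts, features):
    """features: (C, H, W) output of G_{3,1}; returns the predictions of every part."""
    outputs = []
    x = features
    for node_branch, link_branch in parts:
        node_maps, link_maps = node_branch(x), link_branch(x)  # nodes / correlations
        outputs.append((node_maps, link_maps))
        # Next part's input: G_{3,1} features concatenated with these predictions.
        x = np.concatenate([features, node_maps, link_maps], axis=0)
    return outputs


feats = np.ones((16, 8, 8))
outs = run_predictor(build_predictor(16, n_nodes=19, n_links=38), feats)
print(len(outs), outs[-1][0].shape, outs[-1][1].shape)
```

Re-injecting the original features at every part lets later parts refine earlier predictions while still seeing the image evidence.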

We define the directed detection model for the gesture nodes of power maintenance personnel as $G := G_3 \circ G_2 \circ G_1 : F_i \to S_i$, that is, $G_1$, $G_2$, and $G_3$ are applied in succession. Thus, we obtain the gesture node information $S_i \in \mathbb{R}^{l_i \times s \times 3}$. In this formulation, $l_i$ is the number of power maintenance personnel in the single-frame image $F_i \in \mathbb{R}^{n_1 \times n_2}$, and $s$ is the number of gesture nodes. The final dimension holds the x-coordinate, y-coordinate, and confidence value of each gesture node of the power maintenance personnel. After the detection results are overlaid on the original image, the final image is obtained, as shown in Fig.4.

Fig.4 Diagram of human posture node detection

2.4 Safety risk behavior identification

A graph neural network (GNN) is concatenated after the human gesture detection network for power maintenance personnel and is used to identify their safety risk behavior. Safety risk behavior identification is also training-based. We construct a graph from the connection relations among the human key nodes and use the safety risk behavior class of the power maintenance personnel as the graph label, which yields the training data. We then use these data to train our GNN model $H: S \to P$. The classification result of the safety risk behavior for power maintenance personnel is $P = \{P_n\}_{n=1}^{T}$. In this formulation, $T$ is the total number of personnel identified in the current video stream, $n$ is the personnel serial number, the first dimension of $P_n$ represents the behavior code, and the second dimension represents the confidence of behavior identification. The graph built from the relationships between the human key nodes is the input of the GNN, whose structure is shown in Fig.5. In the GNN, all human keypoints are linked to each other. We use the keypoint sequence as the input of the GNN and learn the link weights between keypoints by gradient-descent-based training. After the graphs are arranged in temporal order, the GNN model learns the patterns of personnel behavior, which allows us to classify personnel behavior and, finally, the safety risk behavior of power maintenance personnel.

Fig.5 GNN model structure diagram (not all links between nodes in S are shown)
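A minimal sketch of the graph classifier $H$ is given below. Because the paper only names the GCNConv+NPool combination, the sketch assumes the standard symmetrically normalized graph convolution, mean pooling over nodes and frames as a stand-in for NPool, a fixed fully connected adjacency (in the paper the link weights are learned by gradient descent), and random weights in place of trained ones. It returns a behavior code and a confidence, mirroring the two dimensions of $P_n$; all names and sizes are hypothetical.

```python
import numpy as np


def gcn_layer(A, X, W):
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    A_hat = A + np.eye(A.shape[0])  # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    A_norm = A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(A_norm @ X @ W, 0.0)


def classify_sequence(keypoint_seq, A, W1, W2, W_out):
    """keypoint_seq: (frames, s, 3) array of x, y, confidence for one person."""
    pooled = []
    for frame in keypoint_seq:
        H = gcn_layer(A, gcn_layer(A, frame, W1), W2)
        pooled.append(H.mean(axis=0))  # node pooling (mean assumed)
    logits = np.mean(pooled, axis=0) @ W_out  # temporal mean, then linear head
    probs = np.exp(logits - logits.max())
    probs /= probs.sum()  # softmax over behavior classes
    code = int(np.argmax(probs))
    return code, float(probs[code])  # (behavior code, confidence)


s, hidden, n_classes = 18, 16, 4  # 4 = one correct + three illegal behaviors
rng = np.random.default_rng(0)
A = np.ones((s, s)) - np.eye(s)  # every keypoint linked to every other
W1 = rng.standard_normal((3, hidden))
W2 = rng.standard_normal((hidden, hidden))
W_out = rng.standard_normal((hidden, n_classes))
seq = rng.uniform(0, 1, size=(20, s, 3))  # 20 frames per sequence
print(classify_sequence(seq, A, W1, W2, W_out))
```

Replacing the fixed `A` with a trainable matrix would correspond to the learned link weights described in the text.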

3 Simulation experiments and result analysis

3.1 Simulation experiments

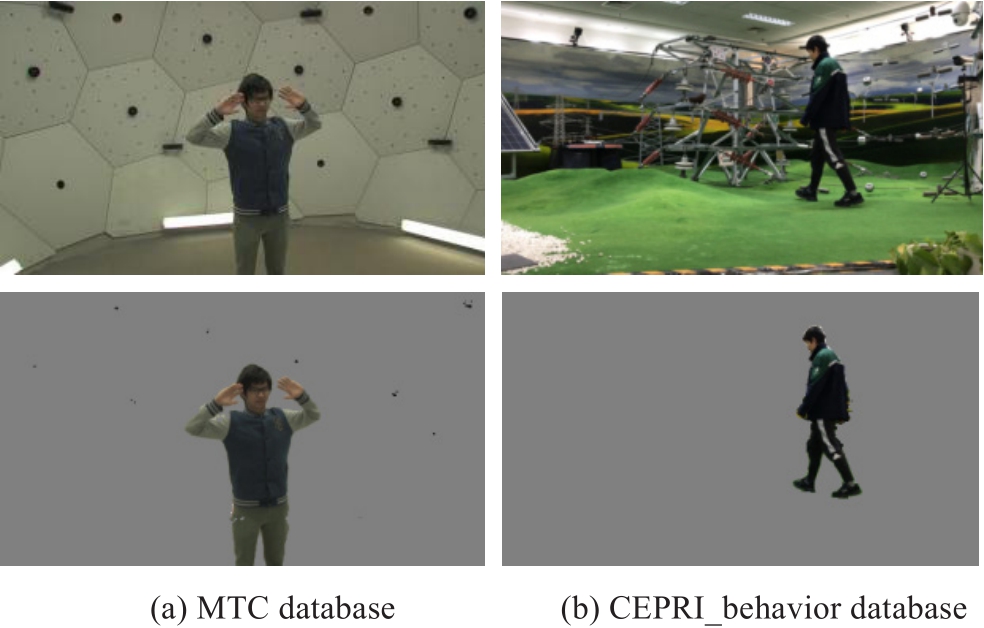

All experiments in this study were run on an Intel(R) Core(TM) i7-3770 @ 3.40 GHz CPU and an NVIDIA GeForce RTX 2070 @ 1506 MHz GPU, using OpenPose version 1.7.0. We carried out simulation experiments on the foreground–background separation and risk behavior detection tasks using the MTC dataset and the self-built “CEPRI_behavior” dataset. The MTC dataset is a subset of the Panoptic Studio dataset and is distributed under the same license. It contains 27,784 training images and 9,046 test images covering a wide range of body and hand motions on a gray background. The CEPRI_behavior dataset was built by the China Electric Power Research Institute. It contains 1,000 training and 306 test image sequences, each consisting of 20 frames. Each image sequence illustrates one power maintenance behavior: either the correct behavior or one of three types of illegal behavior. The body postures of the power maintenance personnel range from 0° to 45°.

The quality of a detector $d_0$ is measured by its probability of correct keypoint (PCK), that is, the probability that a predicted keypoint lies within a distance threshold $\sigma$ of its true location. For a particular keypoint $p$, we denote it as $\mathrm{PCK}_{\sigma}^{p}(d_0)$ and approximate it on a test set $T$ as

$$\mathrm{PCK}_{\sigma}^{p}(d_0) = \frac{1}{|T|} \sum_{f \in T} \delta\left( \left\| d_0^{p}(I_f) - y_f^{p} \right\|_2 < \sigma \right)$$

where $\|\cdot\|_2$ denotes the Euclidean norm, $d_0^{p}(I_f)$ is the $p$-th keypoint prediction on image $I_f$, $y_f^{p}$ is its true location, and $\delta(\cdot)$ is the indicator function. For multiview bootstrapping to succeed, a low false-positive rate is needed when accepting erroneous triangulations as valid. Here, we introduce a slight modification to the PCK index and define the matching threshold as a certain ratio of the head segment length. Specifically, the distance between the predicted and true keypoint locations is divided by the head segment length of the human body before it is compared with the threshold. The advantage of this method is that the evaluation index adapts to different human body sizes. We denote this metric as the PCKh index and use it to evaluate the proposed algorithm. For scenes with multiple people, the evaluation is performed by computing the PCKh of each person and then averaging all the PCKh values.
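The PCKh computation can be sketched as follows. The array layout, the default threshold $\alpha = 0.5$ (the value commonly reported as PCKh@0.5), and the use of one head segment length per image are assumptions; sweeping $\alpha$ produces accuracy curves over the normalized distance of the kind shown in Fig.9 and Fig.10.

```python
import numpy as np


def pckh(pred, gt, head_len, alpha=0.5):
    """Per-keypoint PCKh.

    pred, gt: (num_images, num_keypoints, 2) predicted / true (x, y) locations.
    head_len: (num_images,) head segment length of the annotated person.
    """
    dist = np.linalg.norm(pred - gt, axis=-1)  # Euclidean error ||.||_2
    normalized = dist / np.asarray(head_len)[:, None]  # divide by head length
    return (normalized < alpha).mean(axis=0)  # indicator averaged over the test set


def pckh_curve(pred, gt, head_len, alphas):
    """Mean PCKh over all keypoints for each normalized-distance threshold."""
    return [float(pckh(pred, gt, head_len, a).mean()) for a in alphas]


gt = np.zeros((2, 2, 2))
pred = np.array([[[1.0, 0.0], [3.0, 0.0]],
                 [[0.0, 0.0], [0.0, 0.0]]])
print(pckh(pred, gt, head_len=[4.0, 4.0]))  # [1.  0.5]
```

Here the second keypoint of the first image has a normalized error of 0.75, so it fails the 0.5 threshold and that keypoint's PCKh drops to 0.5.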

3.2 Result analysis

a) Foreground–background separation task

In this experiment, the parameter values are n = 32 and λ = 0.0005. After computing the background, we removed it by replacing the pixels whose values differ little from the background image with gray pixels.
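The gray-replacement step can be sketched as a per-pixel threshold on the difference between a frame and the recovered background. The threshold of 20 intensity levels, the gray value of 128, and the single-channel input are hypothetical choices; the paper does not report them.

```python
import numpy as np


def gray_out_background(frame, background, threshold=20.0, gray=128.0):
    """Replace pixels that are close to the estimated background with gray.

    frame, background: arrays of the same shape (single-channel assumed).
    threshold, gray: hypothetical values, not taken from the paper.
    """
    frame = np.asarray(frame, dtype=float)
    close = np.abs(frame - np.asarray(background, dtype=float)) < threshold
    out = frame.copy()  # leave the input untouched
    out[close] = gray  # small difference from background -> treat as background
    return out


frame = np.array([[10, 200], [50, 60]])
background = np.array([[12, 100], [50, 0]])
print(gray_out_background(frame, background))
```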

To verify the foreground–background separation, we applied the ALM algorithm in simulation experiments on the MTC dataset and the CEPRI_behavior dataset. As shown in Fig.6, the separation is good and agrees with everyday human visual perception.

Fig.6 Foreground-background separation

b) Risk behavior detection task

In this experiment, the parameter values are epoch = 50, flip_r = 0.8, wd = 0.0001, and ρ = 0.9. The initial learning rate is 0.02 and is reduced by a factor of 10 after 16 and 22 epochs. We initialize our backbone network with weights pre-trained on ImageNet. The input image is split into 600 × 600 tiles, with adjacent tiles overlapping by 300 pixels.
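The learning-rate schedule and the overlapping tiling described above can be sketched in plain Python. Interpreting "after 16 and 22 epochs" as zero-based epoch indices 16 and 22, and adding one extra tile flush with the far border when the stride does not divide the image evenly, are assumptions; the function names are hypothetical.

```python
def learning_rate(epoch, base_lr=0.02, milestones=(16, 22), gamma=0.1):
    """Step schedule: multiply the rate by gamma once each milestone is reached."""
    lr = base_lr
    for m in milestones:
        if epoch >= m:
            lr *= gamma
    return lr


def tile_origins(length, tile=600, overlap=300):
    """Start offsets of tiles of size `tile` along one axis with the given overlap."""
    if length <= tile:
        return [0]  # a single (possibly padded) tile
    stride = tile - overlap
    starts = list(range(0, length - tile + 1, stride))
    if starts[-1] + tile < length:
        starts.append(length - tile)  # extra tile flush with the far border
    return starts


def tiles(height, width, tile=600, overlap=300):
    """(top, left) corners of all tiles covering a height x width image."""
    return [(top, left)
            for top in tile_origins(height, tile, overlap)
            for left in tile_origins(width, tile, overlap)]


print([learning_rate(e) for e in (0, 16, 22)])
print(tile_origins(1000), len(tiles(1000, 1500)))
```

The 300-pixel overlap means a person cut by one tile boundary appears whole in a neighboring tile.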

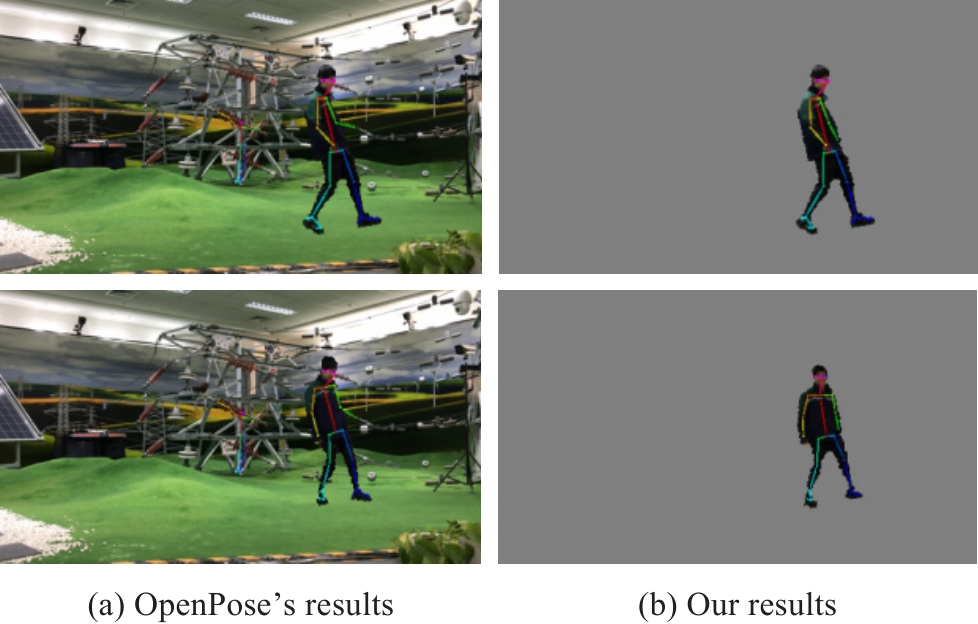

When the background of the image sequence is complex, background content near the personnel may be misjudged as human posture nodes, which can considerably affect the accuracy of subsequent risk behavior discrimination. In our own dataset, other objects were misidentified as humans in 77% of the images, and our background removal algorithm reduced this misrecognition rate by 77%. To examine the advantages of our algorithm beyond reducing background misidentification, the statistical results of the experiments in this paper exclude these 77% of incorrect identifications. Taking the OpenPose algorithm as the control group, Fig.7 shows that OpenPose misjudges the electric power lines near the person as the person's left arm, whereas the proposed algorithm avoids this interference from background information because it separates the foreground from the background.

Fig.7 Comparison of human posture node detection without inclination angle

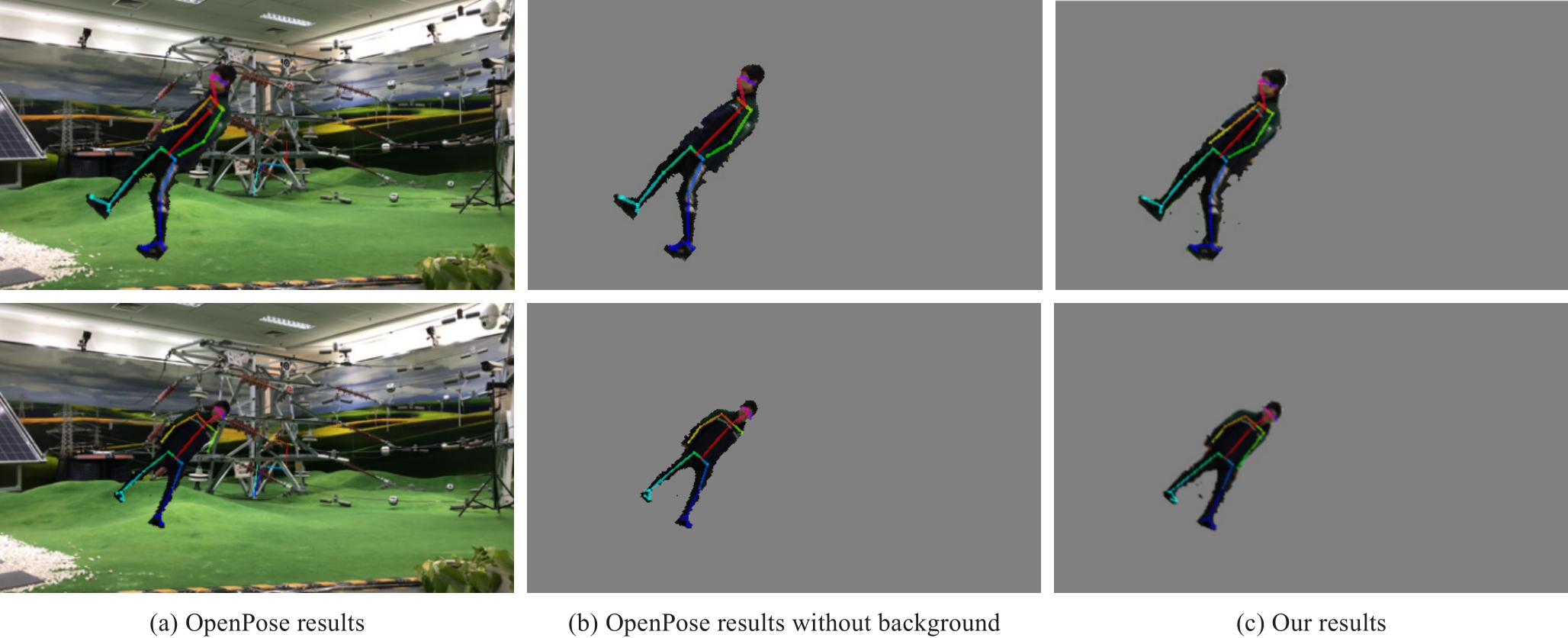

In addition, when the OpenPose algorithm is used as the control group without considering background information, it misses the arms of the targeted person. The proposed algorithm avoids the interference of the inclination angle because it detects the person with an anchor-free frame box and rotates the box to the standard direction. The anchor-free frame box method effectively suppresses both false and missed detections. Regarding missed detection, the arm in Fig.8 (b) is missed when the anchor-free frame box method is not used, whereas our method does not miss it, as shown in Fig.8 (c). Regarding false detection, without the anchor-free frame box method, the deviation in keypoint identification reduces the recognition accuracy; the specific values of this reduction are listed in Table 1.

Fig.8 Comparison of human posture node detection with inclination angle

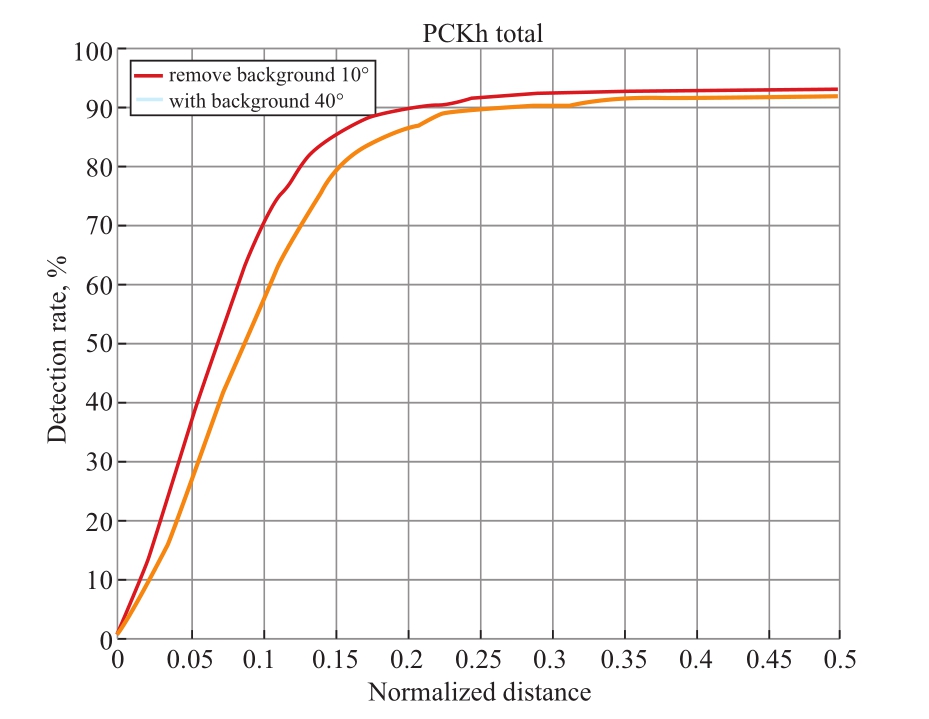

As shown in Fig.9 (a), with a 10° inclination angle between the target person and the vertical direction, the accuracy curves of both algorithms gradually plateau once the normalized distance exceeds 0.25 and stabilize at approximately 92%. Although the proposed algorithm has no significant advantage over the traditional OpenPose algorithm in plateau accuracy, its accuracy curve is better overall.

As shown in Fig.9 (b), with a 40° inclination angle between the target person and the vertical direction, the accuracy curves of the two algorithms gradually plateau once the normalized distance exceeds 0.35 and stabilize at approximately 91.5% and 86.5%, respectively. Therefore, the proposed algorithm has a significant accuracy advantage over the traditional OpenPose algorithm of approximately 5 percentage points.

Fig.9 PCKh index comparison of human posture node detection considering foreground and background information

Furthermore, we examined the relationship between the inclination angle of the targeted person and the performance of the proposed algorithm. As shown in Fig.10, the smaller the inclination angle of the person, the higher the accuracy of human pose node detection.

Fig.10 PCKh index comparison of human posture node detection considering inclination angle

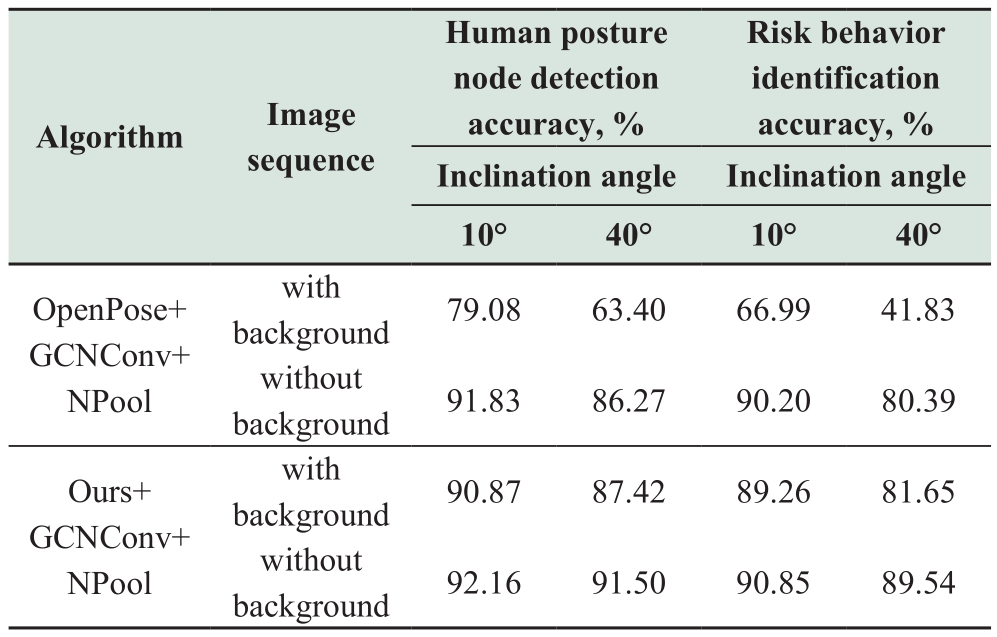

In this study, we used the GCNConv+NPool algorithm for behavior identification. We used the combination of the OpenPose algorithm and the GCNConv+NPool algorithm as the control group in the simulation experiments; the results are shown in Table 1.

In Table 1, “Human posture node detection accuracy” is the PCKh value, and “Risk behavior identification accuracy” is the behavior classification accuracy, that is, the number of correct classifications divided by the total number of output classifications, where the ground truth is the label annotated for each sample and the prediction is the model output. Table 1 indicates that the background information of the image sequence data has little impact on the accuracy of the Ours+GCNConv+NPool algorithm but a significant impact on that of the OpenPose+GCNConv+NPool algorithm. Additionally, the difference in risk behavior identification accuracy is larger than that in human posture node detection accuracy.

Table 1 Comparison of risk behavior identification

Our algorithm not only reduces the human body misrecognition rate by 77% but also significantly improves the recognition accuracy of human keypoints. Given this accuracy advantage, the improvement in keypoint recognition accuracy directly indicates that our algorithm improves the final behavior classification accuracy, even without comparing our chosen behavior classification algorithm against others. Therefore, the selected behavior classification algorithm was not compared with other behavior classification algorithms.

4 Conclusion

In this paper, a novel image sequence-based risk behavior detection method for power maintenance personnel is proposed. We use a neural network to achieve high-precision detection of the human body keypoints of power inspection personnel, and a GNN is concatenated after this human gesture recognition network to identify the safety risk behavior of power maintenance personnel. The main innovations presented herein are as follows.

First, a foreground–background separation strategy is proposed. Because traditional human gesture recognition algorithms are sensitive to overlapping foreground and background image information, we present the idea of “fixed background and changing foreground.” We separate the foreground and background images in the monitoring video of the power inspection personnel and use the separated foreground to detect the safety risk behavior of power maintenance personnel, thereby improving detection accuracy.

Second, a directed human gesture recognition strategy is proposed. Traditional human gesture recognition algorithms are sensitive to the angular deviation between the image captured by the deployed camera equipment and the live scene. To reduce this sensitivity, we propose a human gesture recognition strategy for power maintenance personnel based on an anchor-free frame box, which effectively suppresses both false and missed detections.

Acknowledgements

This study is supported by the project “Research and application of key technologies of safe production management and control of substation operation and maintenance based on video semantic analysis” (5700-202133259A-0-0-00) of the State Grid Corporation of China.

Declaration of Competing Interest

We declare that we have no conflict of interest.

References

[1]Andriluka M, Pishchulin L, Gehler P, et al.(2014) 2D human pose estimation: new benchmark and state of the art analysis.2014 IEEE Conference on Computer Vision and Pattern Recognition, 3686-3693

[2]Belagiannis V, Zisserman A (2017) Recurrent human pose estimation.12th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition, 468-475

[3]Bulat A, Tzimiropoulos G (2016) Human pose estimation via convolutional part heatmap regression.Computer Vision – European Conference on Computer Vision 2016.doi:10.1007/978-3-319-46478-7_44

[4]Gkioxari G, Hariharan B, Girshick R, et al.(2014) Using k-poselets for detecting people and localizing their keypoints.2014 IEEE Conference on Computer Vision and Pattern Recognition, 3582-3589

[5]Insafutdinov E, Pishchulin L, Andres B, et al.(2016) Deepercut:A deeper stronger and faster multi-person pose estimation model.Computer Vision – European Conference on Computer Vision 2016, 34-50

[6]Iqbal U, Gall J (2016) Multi-person pose estimation with local joint-to-person associations.Computer Vision – European Conference on Computer Vision 2016 Workshops, 627-642

[7]Newell A, Yang K, Deng J (2016) Stacked hourglass networks for human pose estimation.Computer Vision – European Conference on Computer Vision 2016, 483-499

[8]Ouyang W, Chu X, Wang X (2014) Multi-source deep learning for human pose estimation.2014 IEEE Conference on Computer Vision and Pattern Recognition, 2337-2344

[9]Pishchulin L, Insafutdinov E, Tang S, et al.(2016) Deepcut: Joint subset partition and labeling for multi person pose estimation.2016 IEEE Conference on Computer Vision and Pattern Recognition, 4929-4937

[10]Wei S-E, Ramakrishna V, Kanade T, et al.(2016) Convolutional pose machines.2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 27-30 Jun

[11]Mazza V, Turatto M, Umiltà C (2005) Foreground-background segmentation and attention: A change blindness study.Psychological Research, 69: 201-210

[12]Ding Y, Jingjing Q, Jihao Y (2017) Image segmentation via foreground and background semantic descriptors.Journal.of Electronic Imaging, 26(5): 053004

[13] Shelhamer E, Long J, Darrell T (2017) Fully convolutional networks for semantic segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(4): 640-651

[14] Li Y, Qi H, Dai J, et al. (2017) Fully convolutional instance-aware semantic segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2359-2367

[15] Liu Y, Liu J, Li Z, et al. (2013) Weakly-supervised dual clustering for image semantic segmentation. 2013 IEEE Conference on Computer Vision and Pattern Recognition, 2075-2082

[16] Tao L, Porikli F, Vidal R (2014) Sparse dictionaries for semantic segmentation. Computer Vision – European Conference on Computer Vision 2014, 549-564

[17] Göring C, Fröhlich B, Denzler J (2012) Semantic segmentation using GrabCut. Proceedings of International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications 2012

[18] Arbeláez P, Hariharan B, Gu C, et al. (2012) Semantic segmentation using regions and parts. 2012 IEEE Conference on Computer Vision and Pattern Recognition, 16-21 Jun

[19] Csurka G, Perronnin F (2011) An efficient approach to semantic segmentation. International Journal of Computer Vision, 95: 198-212

[20] Lin Z, Chen M, Ma Y (2013) The augmented Lagrange multiplier method for exact recovery of corrupted low-rank matrices. arXiv preprint arXiv:1009.5055. doi:10.48550/arXiv.1009.5055

[21] Yan F, Chen K, Salvador E, et al. (2017) Quantum image rotation by an arbitrary angle. Quantum Information Processing, 16: 282

[22] Paeth AW (1986) A fast algorithm for general raster rotation. Proceedings of Graphics Interface and Vision Interface, 77-81

[23] Unser M, Thévenaz P, Yaroslavsky L (1995) Convolution-based interpolation for fast, high-quality rotation of images. IEEE Transactions on Image Processing, 4(10): 1371-1381

[24] Ge P, Wang H (2015) Image rotation and translation measurement based on joint transform correlator. Optik, 126(5): 564-569

[25] Fang H-S, Xie S, Tai Y-W, et al. (2017) RMPE: Regional multi-person pose estimation. 2017 IEEE International Conference on Computer Vision, 22-29 Oct

[26] Cao Z, Hidalgo G, Simon T, et al. (2021) OpenPose: Realtime multi-person 2D pose estimation using part affinity fields. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(1): 172-186

Received: 19 April 2022 / Accepted: 15 September 2022 / Published: 25 December 2022

Changyu Cai

caichangyu@epri.sgcc.com.cn

Jianglong Nie

niejl@gs.sgcc.com.cn

Wenhao Mo

mowenhao@epri.sgcc.com.cn

Zhouqiang He

hezhq@gs.sgcc.com.cn

Yuanpeng Tan

tanyuanpeng@epri.sgcc.com.cn

Zhao Chen

chenzhao@gs.sgcc.com.cn

Biographies

Changyu Cai was born in Hebei, China, in 1983. He received his Master’s degree from Changchun University of Science and Technology, Changchun, China, in 2010. He works at the China Electric Power Research Institute, Beijing, China. His research interests include electric power, artificial intelligence, and computer vision and its applications.

Jianglong Nie was born in China in 1973. He works at State Grid Gansu Electric Power Company, Lanzhou, China. His research interests include electric power, artificial intelligence, and electrical engineering.

Wenhao Mo was born in Shijiazhuang, China, in 1996. He received his Master’s degree in control science and engineering from Harbin Institute of Technology in 2020. Since 2020, he has worked as an engineer in the Artificial Intelligence Application Department, China Electric Power Research Institute (CEPRI). His research areas include power equipment inspection, deep learning, and image processing.

Zhouqiang He was born in China in 1981. He works at State Grid Gansu Electric Power Company, Lanzhou, China. His research interests include electric power, artificial intelligence, and electrical engineering.

Yuanpeng Tan was born in Tangshan, China, in 1987. He received his Ph.D. degree in power information technology from North China Electric Power University in 2017. He is currently a senior engineer in the Artificial Intelligence Application Department, China Electric Power Research Institute (CEPRI). His research interests include power equipment inspection, knowledge graphs, graph computing, and related technologies.

Zhao Chen was born in China in 1988. He currently works at State Grid Gansu Electric Power Company, Lanzhou, China. His research interests include electric power, artificial intelligence, and electrical engineering.

(Editor Yajun Zou)